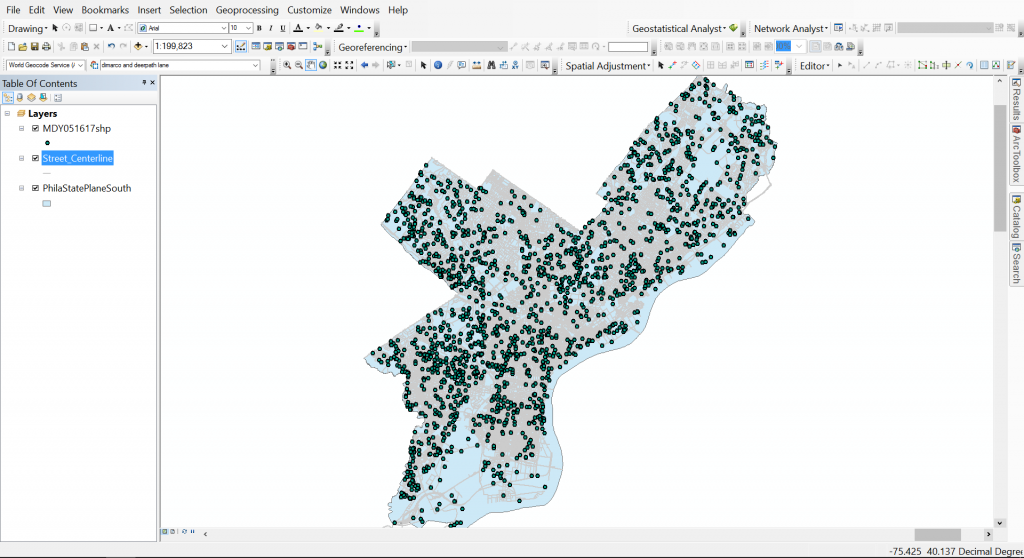

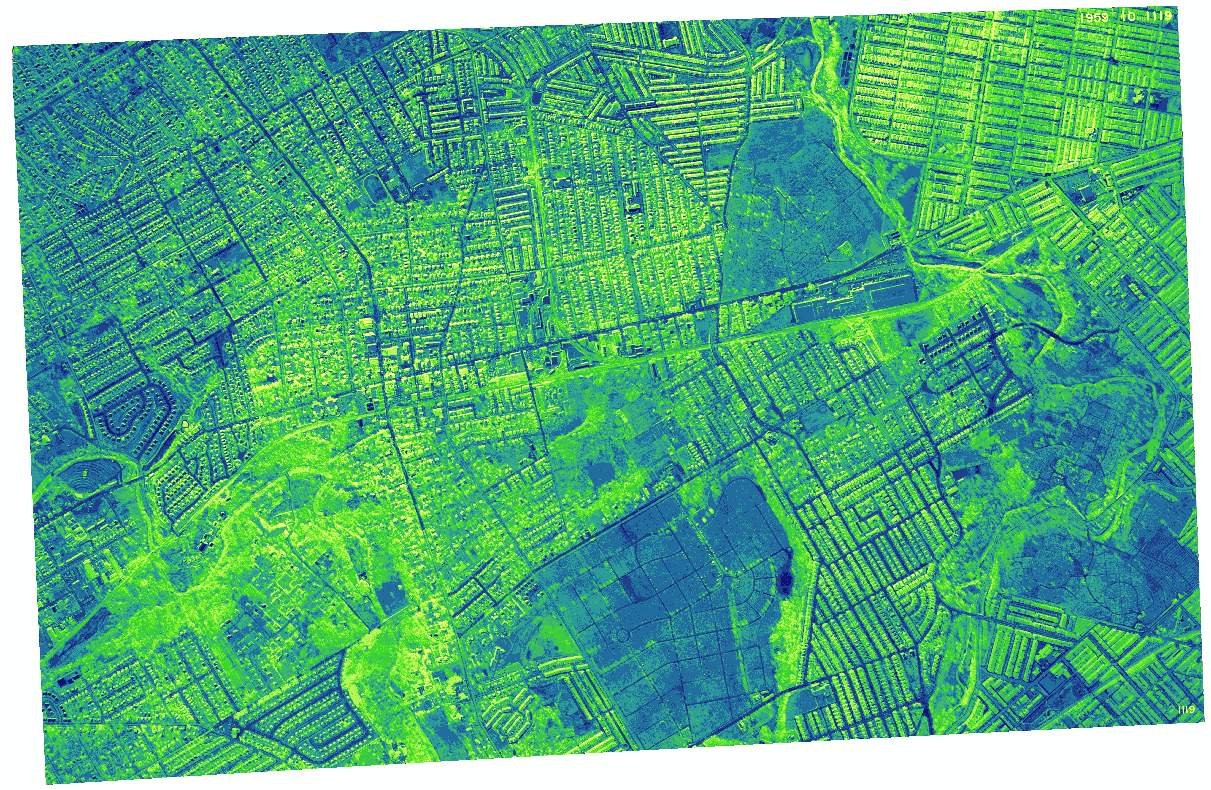

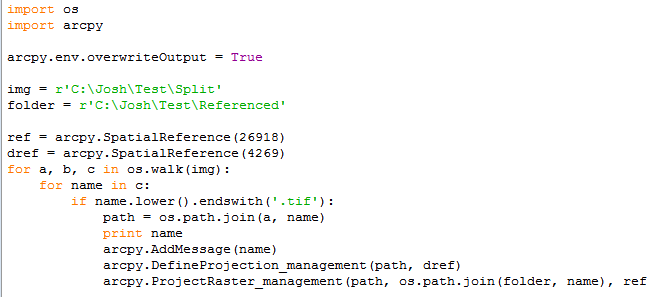

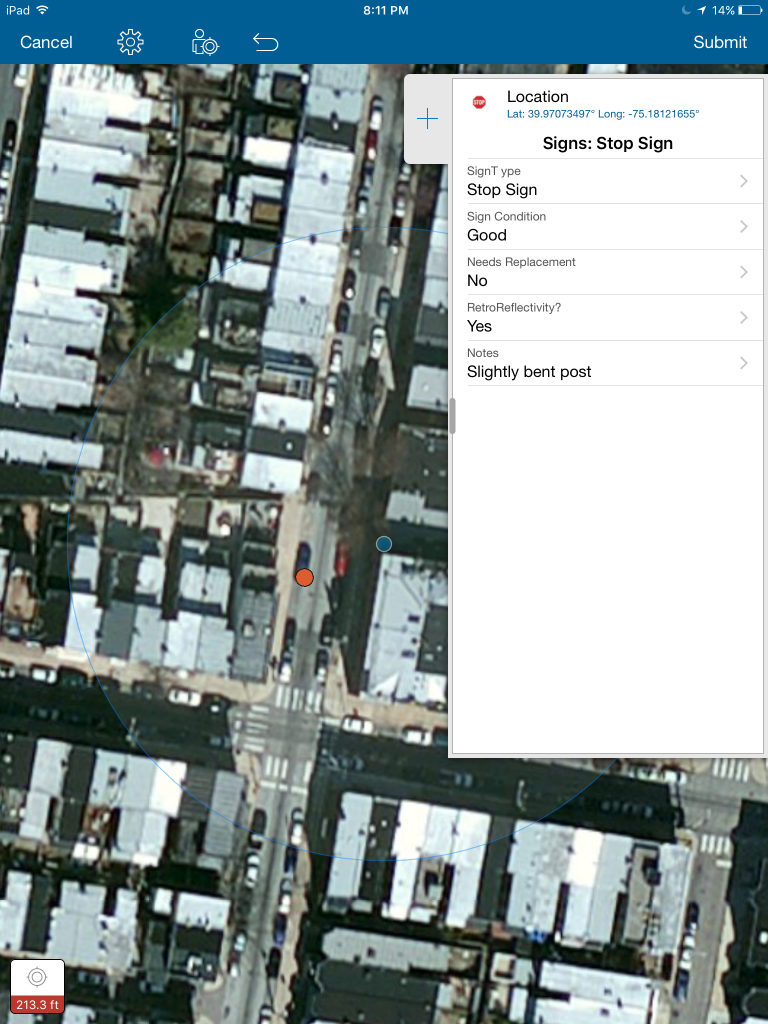

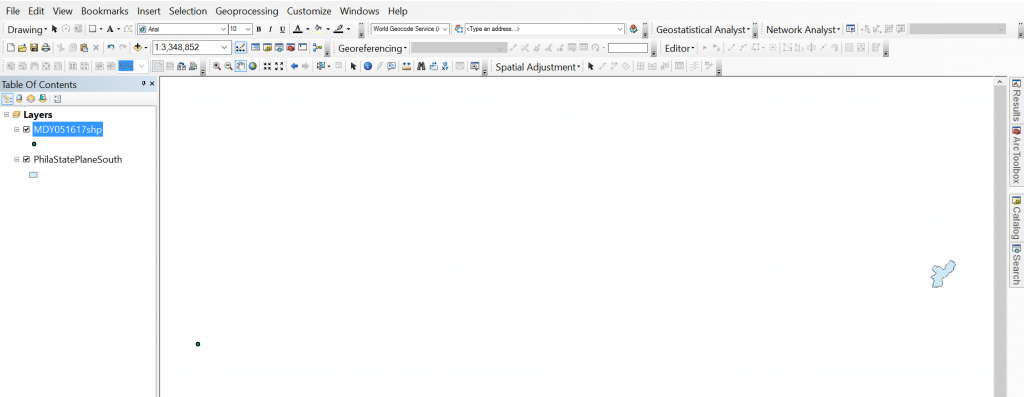

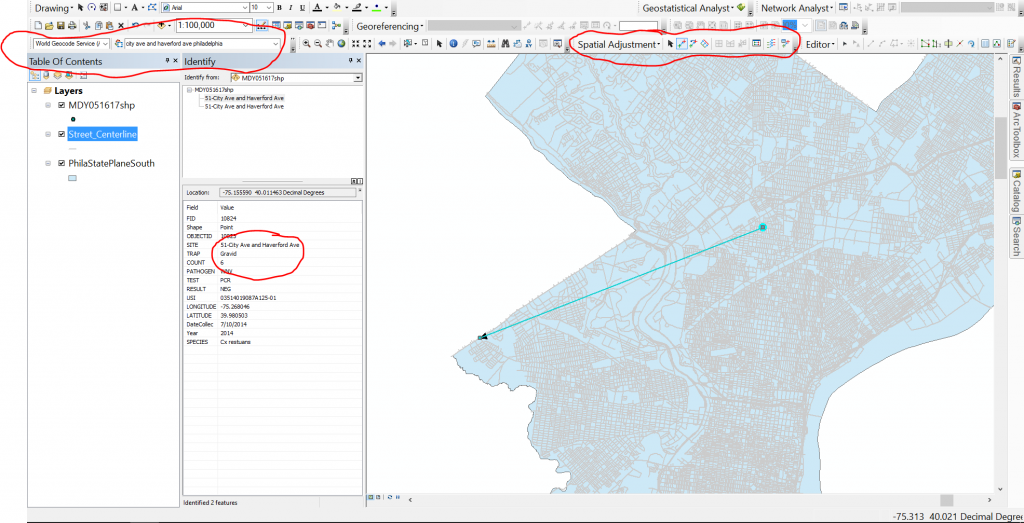

For the Philadelphia Public Health Department, I have been asked to track mosquito trapping results over the past 17 years. The data is submitted to a state website, where testing centers then upload the results of tests for West Nile and Zika viruses. Luckily, every entry on the state website produces a lat/lon, so when I pull the test results I can easily plot the XY coordinates. The harder part is getting the point set to the correct scale and location relative to the polygon layer. Even though both layers are projected into Pennsylvania State Plane South (EPSG: 2272), there is quite a big difference in location and size, as you can see in the image below.

So with that, the goal is to move the point set and georeference it to the Philadelphia City Limits layer, which is in State Plane South.

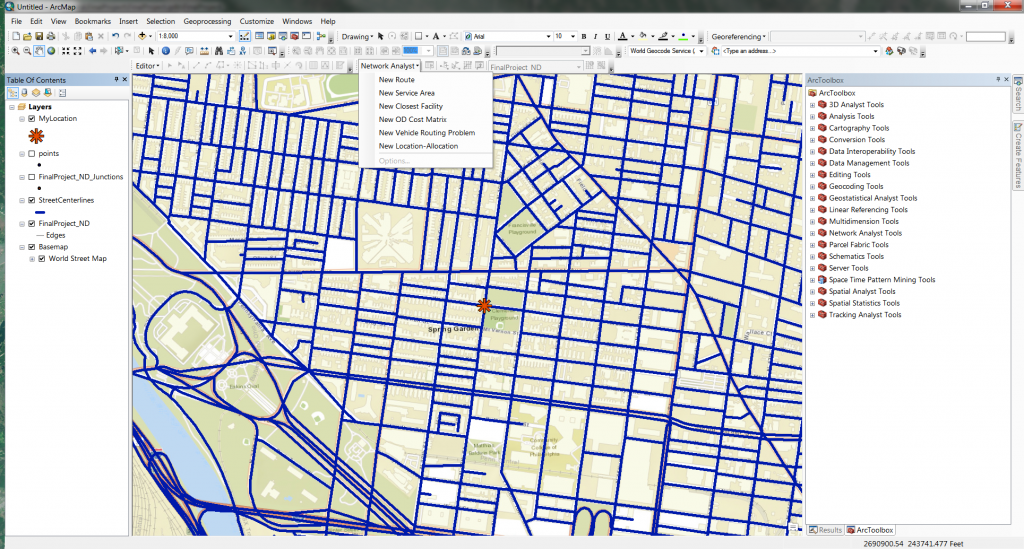

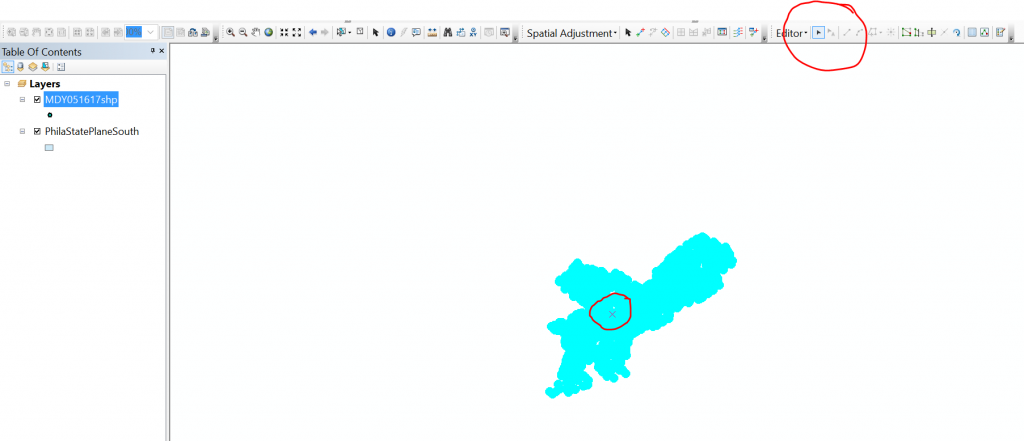
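Before moving anything, it can be worth checking whether the point coordinates are still raw longitude/latitude degrees rather than State Plane feet, since that kind of unit mismatch is one possible (unconfirmed here) reason for a large difference in location and size. The sketch below is a minimal plain-Python check; the function name and the sample values are hypothetical and are not taken from the trapping data.

```python
def looks_like_degrees(points):
    """Return True if every (x, y) pair fits inside the lon/lat range.

    Coordinates in a projected system measured in feet are usually far
    outside +/-180 and +/-90, so this is only a rough heuristic.
    """
    return bool(points) and all(
        -180.0 <= x <= 180.0 and -90.0 <= y <= 90.0 for x, y in points
    )


# Hypothetical sample values for illustration only.
lon_lat_points = [(-75.16, 39.95), (-75.10, 40.02)]
state_plane_points = [(2693000.0, 236000.0), (2710000.0, 262000.0)]

print(looks_like_degrees(lon_lat_points))
print(looks_like_degrees(state_plane_points))
```

If the check comes back true for a layer that is labeled as EPSG: 2272, the values are probably still in degrees and the layer's coordinate system definition deserves a second look.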

To start this process, you need to be able to edit the point set layer, which you can’t do if you have just plotted the xy’s. You’ll need to export the data as a new shapefile. Once you’ve done that, click on Editor -> Start Editing. If you don’t see Editor, you will have to add the toolbar from the Customize drop-down menu. In Editor, you may need to select the layer you plan on editing, in this case, the point set that was just created. Next, select all points by right-clicking on the layer in the Table of Contents and pressing select all. An X will appear in the center of the points layer and you can grab the entire point set and move it closer to the Philadelphia layer. If you are unable to grab it, it’s because you have to have the editor’s cursor arrow selected like in the image below.

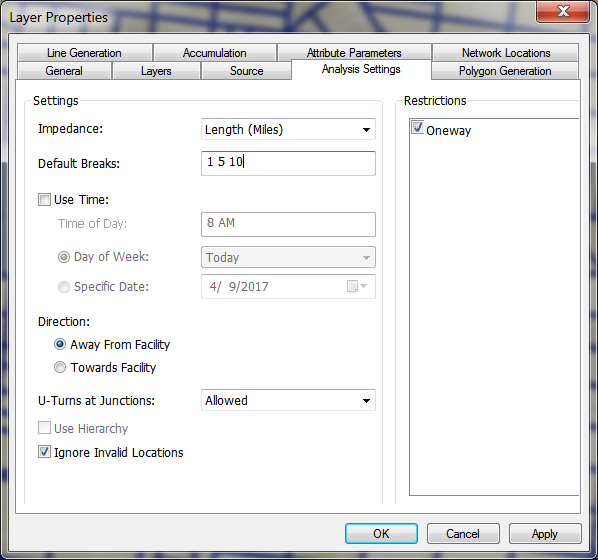

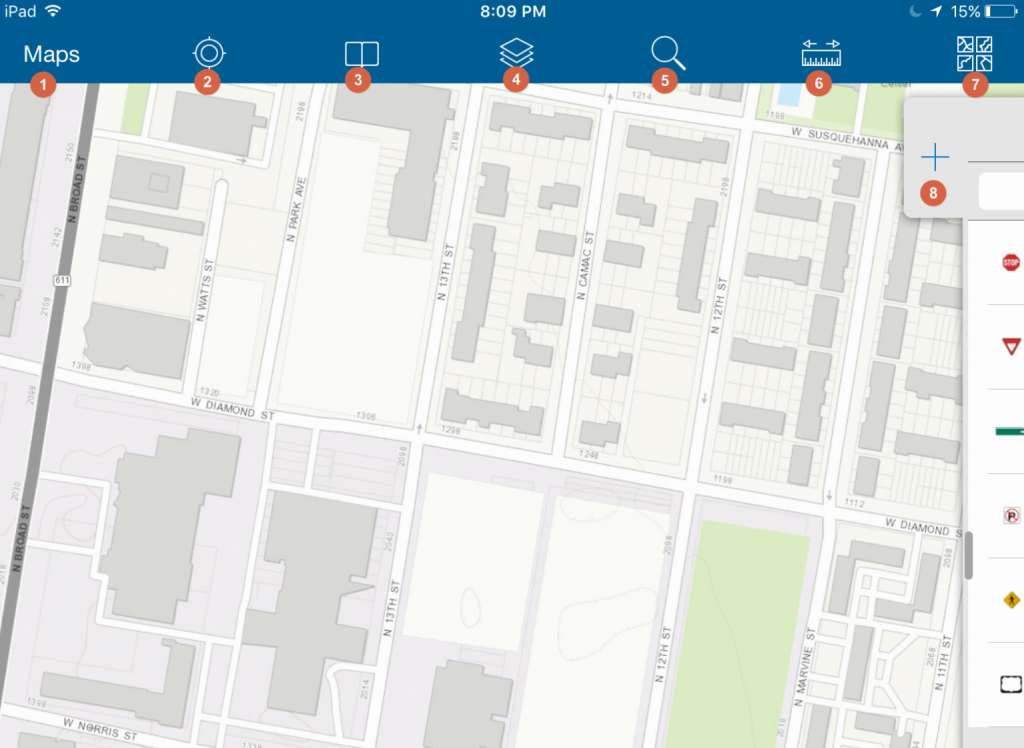

Now it’s time to georeference, but using spatial adjustment. If you don’t see the Spatial Adjustment toolbar, add it from the Customize drop-down menu. Check your current coordinate system to ensure that you are in the EPSG that you want; in my case, it was 2272.

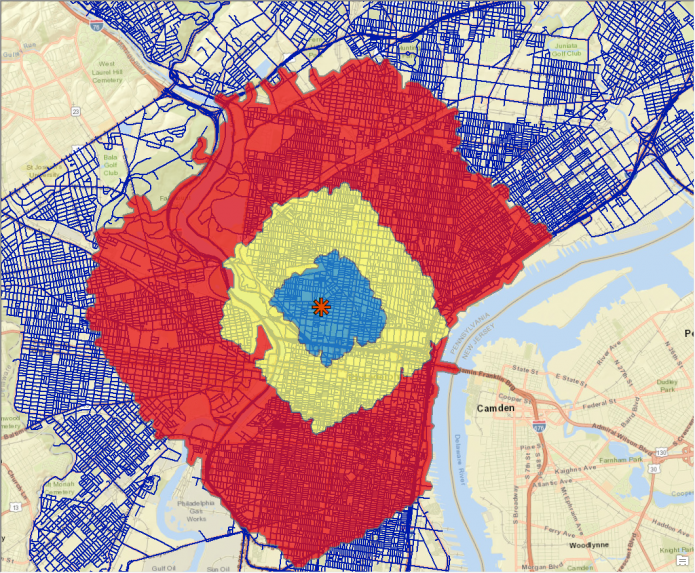

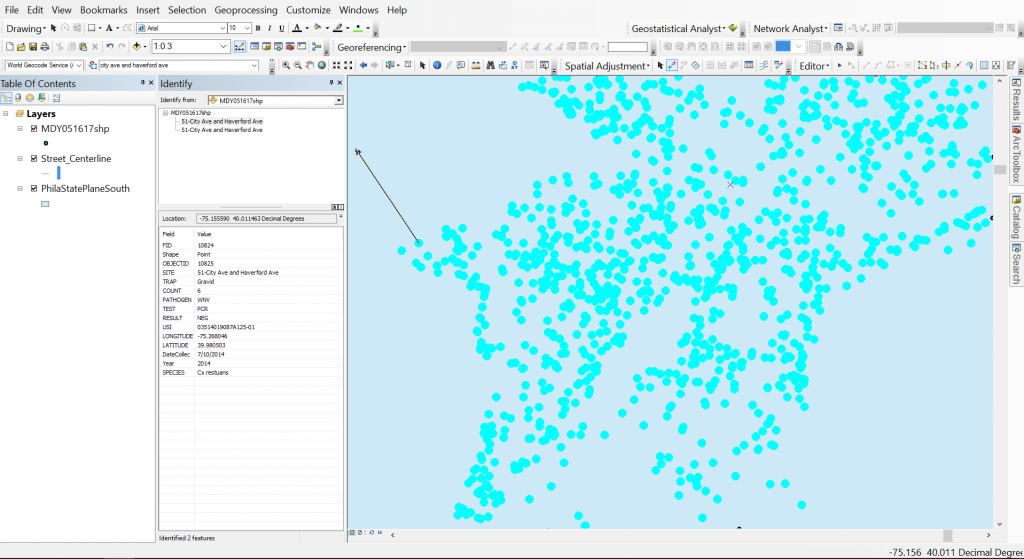

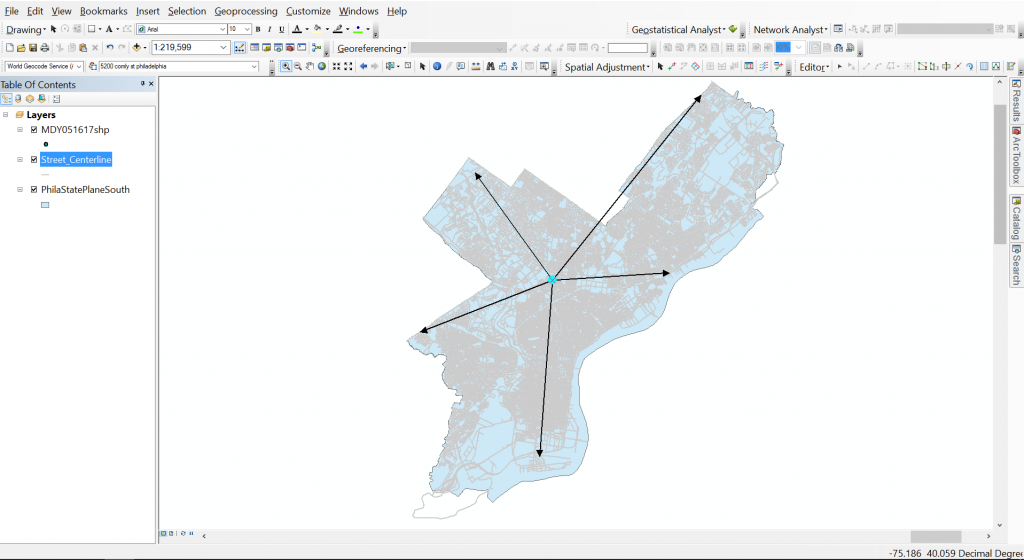

I used the information button to get the cross streets from my data set and then placed the first end of the link on that point. After that, I used the world geocoder service that ESRI provides as a basic geocoder to locate that area on the Philadelphia layer and clicked that exact location to drop the second end of the link. This creates a line that will be used to measure the adjustment needed. The recommendation is to have at least 3 links, but I use at least 4. You can see what that looks like below.

During this entire process, you want to make sure that the entire point set is selected. It is not fun to realize you left 2 points way off to the left and you have to start over. Placement of your links is vital to the success of your adjustment. Be sure to scatter them across the point set rather than clustering them in one area.

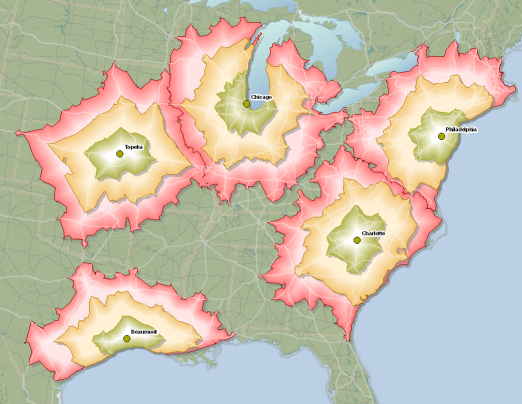

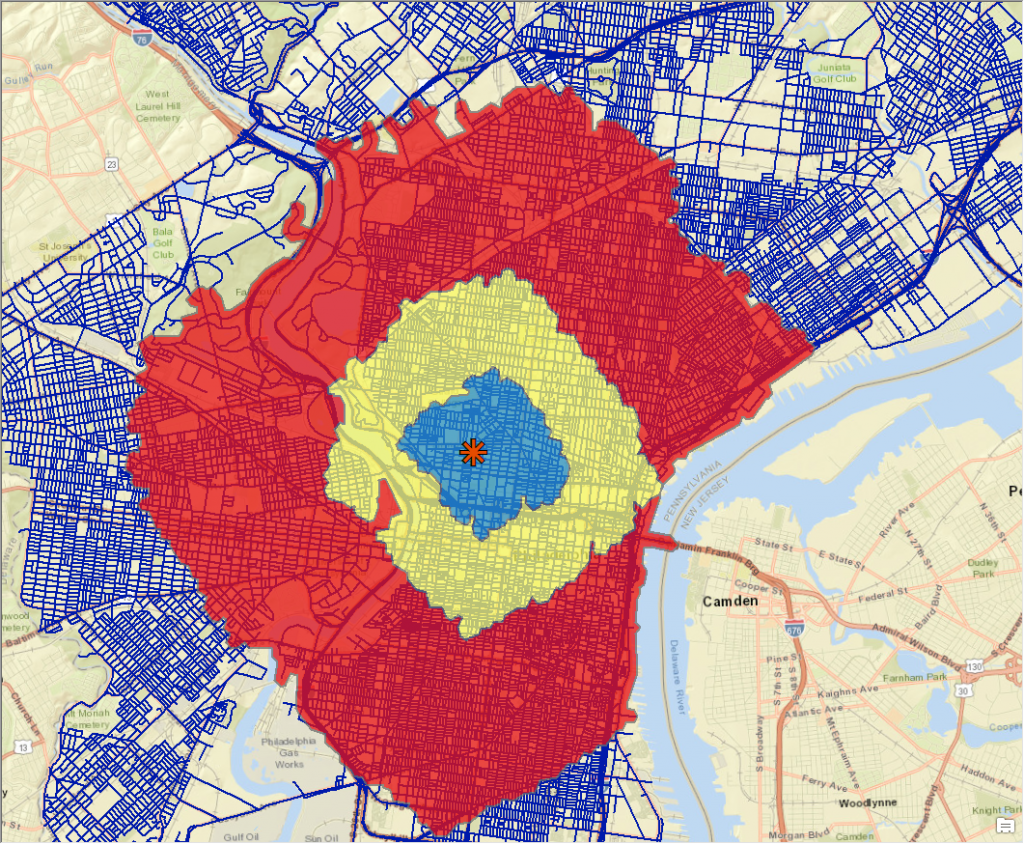
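To see why at least 3 scattered links matter, here is a rough sketch of the math behind this kind of adjustment, assuming an affine transformation (the method actually set in the toolbar may differ). An affine fit has six unknowns, so three non-collinear links pin it down exactly, and a fourth link leaves room to measure how well the links agree. The function names and link coordinates below are made up for illustration and are not part of ArcMap.

```python
import math


def solve3(a, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-9:
            raise ValueError("links are collinear; scatter them")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def fit_affine(links):
    """Least-squares affine fit from (source, destination) link pairs."""
    if len(links) < 3:
        raise ValueError("an affine fit needs at least 3 links")
    n = len(links)
    # Center the source points so large coordinates stay well conditioned.
    cx = sum(src[0] for src, _ in links) / n
    cy = sum(src[1] for src, _ in links) / n
    rows = [(src[0] - cx, src[1] - cy, 1.0) for src, _ in links]
    # Normal equations: (A^T A) p = A^T b, solved once for x' and once for y'.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for k in (0, 1):
        atb = [sum(r[i] * dst[k] for r, (_, dst) in zip(rows, links))
               for i in range(3)]
        params.append(solve3(ata, atb))
    (a, b, c), (d, e, f) = params

    def transform(point):
        x, y = point[0] - cx, point[1] - cy
        return (a * x + b * y + c, d * x + e * y + f)

    return transform


def rms_error(links, transform):
    """Root-mean-square distance between adjusted sources and destinations."""
    squared = []
    for src, dst in links:
        tx, ty = transform(src)
        squared.append((tx - dst[0]) ** 2 + (ty - dst[1]) ** 2)
    return math.sqrt(sum(squared) / len(squared))


# Made-up links: the destination is the source scaled by 2 and shifted (10, 20).
links = [((0, 0), (10, 20)), ((1, 0), (12, 20)),
         ((0, 1), (10, 22)), ((1, 1), (12, 22))]
adjust = fit_affine(links)
moved = adjust((0.5, 0.5))
print(moved, rms_error(links, adjust))
```

With links that all sit on one line, the fit fails because the system has no unique solution, which is the numerical version of the advice to scatter them.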

Once you’ve created at least 3 links, click the spatial adjustment drop-down menu and click Adjust. It is as easy as that. Here is the final point set. Make sure to save all your edits in Editor!