Photo by noor Younis on Unsplash.

The following is a guest post written by Maria Aghazarian, Digital Resources and Scholarly Communications Specialist at Swarthmore College Libraries.

Chances are you already maintain some kind of scholarly presence online, whether that's on your personal website, Twitter, Google Scholar, Academia.edu, ResearchGate, or somewhere else. But keeping each of these profiles up to date separately takes time and energy that could be better spent elsewhere.

ORCID is a multidisciplinary not-for-profit organization that provides persistent numeric identifiers that can streamline the way you present your research online. It takes just 30 seconds to register for an ORCID identifier. ORCID iDs can save you time, help you distinguish yourself in your field, and boost the visibility of your research. How does one number do all that?

By adding your ORCID iD to the systems you already use, you can authorize automatic updates to your ORCID profile and use it to link together your existing profiles. There are 61 publishers and 22 funders that require ORCID iDs during the submission or application process. When the work is published or completed, these organizations will push updates to your ORCID profile with the details of the work, so you don't have to.

Don’t want to spend a lot of time manually adding all your publications? ORCID has 12 different wizards designed to help you add works to your ORCID profile in just minutes. If you already maintain a Google Scholar profile, you can easily export your citations as a BibTeX file and import that file into ORCID, populating your profile in one easy step.

ORCID isn't just for traditional peer-reviewed publications, though; use it to present all of your scholarship. With 39 supported work types, including encyclopedia entries, magazine and newspaper articles, websites, working papers, conference papers and posters, patents, artistic performances, lectures, and software, you can represent the full range of your scholarship with your ORCID iD. To round out your profile, add your memberships and service to organizations, as well as invited positions and distinctions.

Unlike your email, your affiliation, or even your name, your ORCID iD will never change. Your iD stays with you throughout your career, from student scholar to tenured professor, making it easier for others to discover and read your work. It's reliable no matter how your name appears in print. Your number is unique to you and can be used to distinguish yourself from researchers with similar names. If you have published under several names or nicknames, you can also add those variations of your name to your profile ("also known as").

When your ORCID iD appears on an article you've published, it links back to your profile, giving interested readers a reliable picture of your scholarly work no matter where they're coming from. Since your iD will never change, it gives you a stable URL, unlike a personal website, which could move during a site reorganization.

Once you have your iD, you can use ORCID to market yourself. Link your education, employment, grant funding, publications, and more, so your profile works like an interactive companion to your CV. You can also link out to other pages, such as your website or your Google Scholar page. When someone wants to learn more about you and your work, you can direct them to your profile. You can even add a link to your profile in your email signature, CV, or grad school applications, or generate a QR code to include in conference posters and presentations.

ORCID uses OAuth, which means your ORCID account can act as a single sign-on for many different systems. That leaves you with one less password to remember and gives you a way to connect otherwise siloed profiles and accounts. You can securely register with your Temple credentials, or link your Temple account to your profile for even easier sign-in. Because your account is not tied solely to your Temple credentials, you will still be able to access your ORCID profile if you graduate or leave the Temple community.

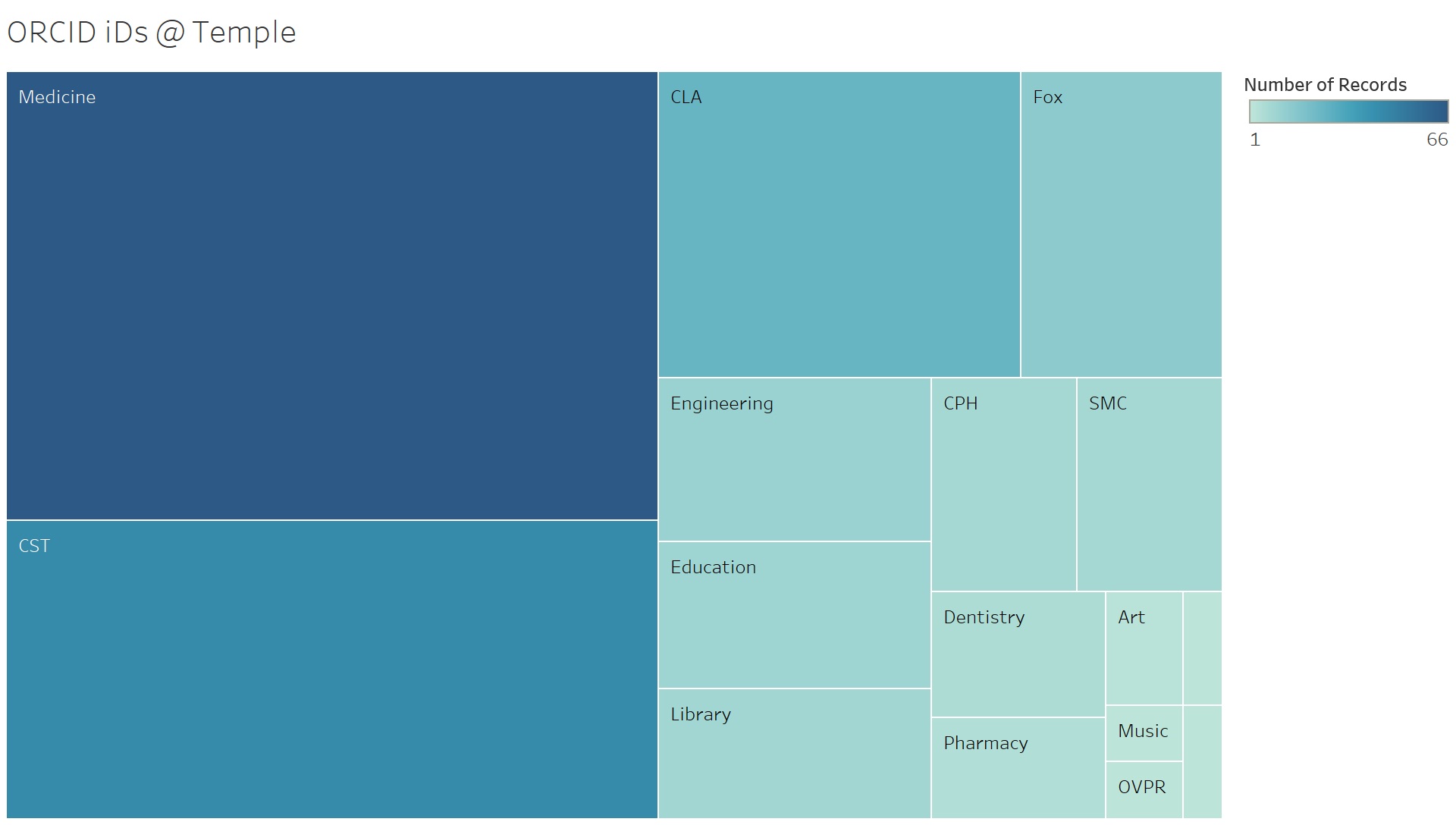

Temple University Libraries is proud to be an institutional member of ORCID.