When we opened Charles Library in August of 2019, we knew right away that we needed to increase the seating capacity in the building. During the day, a walk through the upper floors of the building gives the impression that we are at, or quickly approaching, full capacity.

My first physical-space UX project in Charles was gathering data on the current furniture. In the fall, I worked with Rachel Cox, Nancy Turner, and Evan Weinstein to collect data on how students used the furniture and what they preferred. We're now using that data to recommend additional furniture and space improvements.

Methods

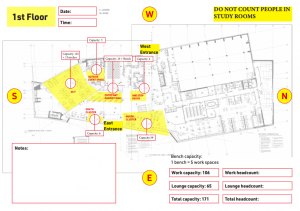

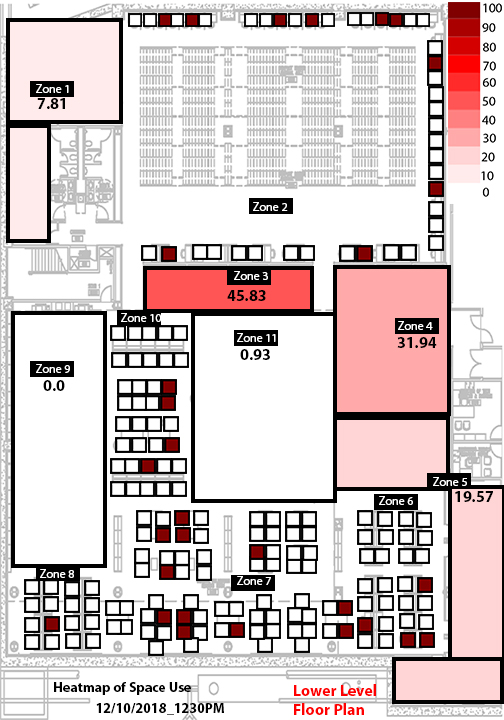

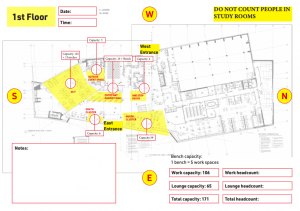

To kick things off, Rachel and I conducted floor-by-floor walkthroughs, counting occupied seats and recording observations about how the furniture was being used. We did these walkthroughs daily for one full week in October, entering the headcounts and observation notes on a worksheet. The student building supervisors collected data at night and on the weekend.

Worksheet for recording observational data

The walkthroughs gave us a sense of how full the building was at different hours and how students were using the space. But we also wanted to know whether students liked the new furniture.

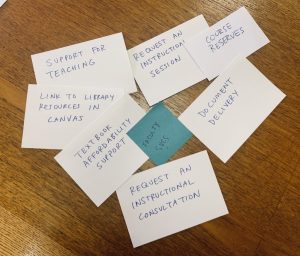

To find out, Rachel and I built a survey that asked why students came to the library, whether the furniture met their needs, and what they liked and disliked about it.

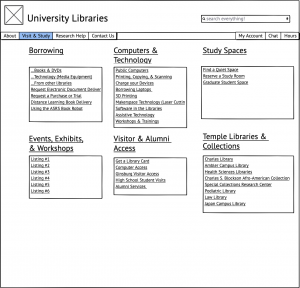

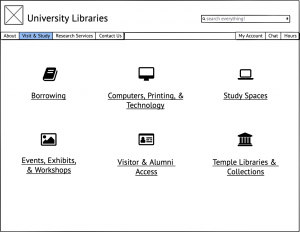

Survey, page 1

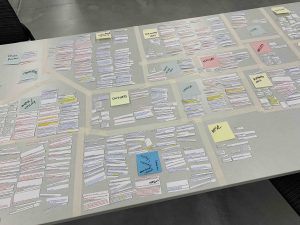

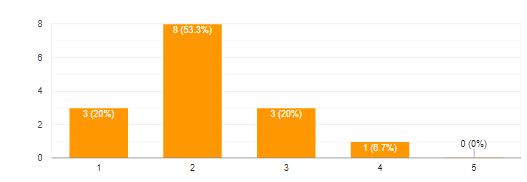

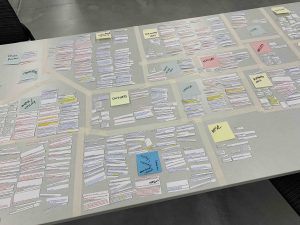

We received 213 responses. Over a couple of days, Rachel and I analyzed more than 600 survey comments, sorting them into categories.

Survey analysis

Findings

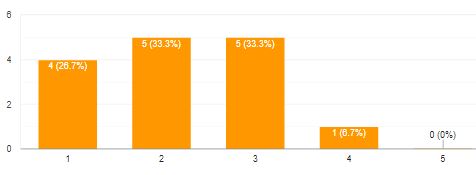

Most respondents (78.4%) came to the library to study alone, but group study was also very common (48%). Because respondents could give more than one reason, these percentages add up to more than 100%. Other reasons to visit included resting between classes, finding a book, going to the cafe, visiting the Scholars Studio, Graduate Study Area, Special Collections, or Student Success Center, and hanging out with friends. Overall, 54% said the furniture didn't meet their needs.

The more than 600 survey comments gave us a more detailed picture of what students wanted:

- More seating generally

- Comfortable seating and “cozy” spaces

- More spaces to support individual study

- An environment mostly free of distractions that still allows some chatting and interaction with others

More seating

The headcounts revealed, somewhat surprisingly, that occupancy rarely exceeded 50% on any floor of the building, even during the day when the building looks full. Yet survey comments told us that students sometimes cannot find a seat in the library, and a few asked specifically for more seats. Students also described the existing seating as "too close" to other students and too small for spreading out their work. Lack of personal space at the large open tables was a common thread throughout the survey comments, which may help explain why the building feels full even when many seats are empty.

Privacy

Right now, the open study areas in Charles Library are furnished mostly with open tables. This is a dramatic shift from Paley Library, where students were accustomed to semi-private, partitioned desks and to armchairs with individual side desks. Students miss the private seating they found in Paley; one commented:

“Please, provide some cubicles or private study spaces. It takes so much time to book a study room because they’re rarely available. The cubicles that used to be in Paley fit my needs well and I have not been nearly as productive since moving to Charles.”

Others spoke directly about the noise and visual distractions at the open tables:

“…perhaps these spaces could be furnished with … divided desks to remove distraction from the (sometimes embarrassingly noisy) library environment and to center focus on the work I came here to do…”

Comfort

Another common theme was the desire for comfortable seating. Students frequently asked us to add "comfortable" furniture and make the space cozier. They used "comfortable" and "cozy" to describe not only soft seating such as beanbags and couches, but also upright tables and chairs. Overall, the comments conveyed a strong preference for furniture that supports work; in many cases, "comfort" meant seating that is ergonomically designed for sitting and working over extended periods:

“[I want] couches and seating like you would find at a cozy coffee shop. The furniture I have used has been very uncomfortable and I cannot do work for a long period of time without something in body aching. The library is absolutely gorgeous, but the furniture is probably the biggest complaint…”

Even our lounge furniture doesn't meet students' expectations for comfort. They described the lounge chairs and small polygon tables as too "low to the ground" and lacking "function." One student illustrated their dislike of the lounge furniture with a drawing of a stick figure hunched uncomfortably in one of our lounge seats, reaching for a laptop on a small polygon table. Others asked for armchairs and other soft seating:

“I don’t really feel that there are any comfortable arm-chair like seats at the library like there were at Paley. I can’t get comfortable anywhere, and as a result, I don’t feel welcome to stay long…”

During the building walkthroughs, we mostly saw students using the lounge seating to do work rather than for socializing or other short-term activities better suited to lounge seating. Because those seats lack a work surface, students either hunched over the small tables or improvised work surfaces from benches, their laps, window ledges, or a second lounge chair. The survey made it clear that students dislike the lounge seating because it isn't ergonomically suited to doing work.

Conclusions

Despite the growth of group and collaborative work, student space needs are still strongly tied to individual study. The survey showed that students come to the library primarily to study alone, and they want to do so in a comfortable environment that is relatively free of distractions.

The survey gives us evidence for prioritizing future furniture purchases, including pods or carrels for individual study. We're also exploring ways to reconfigure our current furniture to create semi-private spaces with minimal distractions. Table partitions and different furniture arrangements can act as barriers, giving students a psychological sense of privacy.

Extra seating for the exam period

When we added individual table seating along the ledge during exams, it was immediately popular. Although these seats are in high-traffic areas, they let students face away from others, which buffers them from visual distractions.

We also want to better understand how study rooms are used, and we will continue to gather student input as we select new furniture.