Emtinan Alqurashi, Ed.D., Jennifer Zaylea, MFA

Generative AI has revolutionized the way we interact with technology, opening new doors to faster and more efficient organization and allowing more time for the creative and conceptual aspects of learning. However, there are considerable challenges that need to be addressed, especially where they could affect how learning happens in our classrooms. In our first blog of the AI series, we discussed what generative AI is and invited you to explore one of these tools (i.e., ChatGPT). In this second blog in the series, we explore some of the benefits and possible pitfalls of using generative AI in the student learning process.

Some benefits of incorporating generative AI into the student learning process:

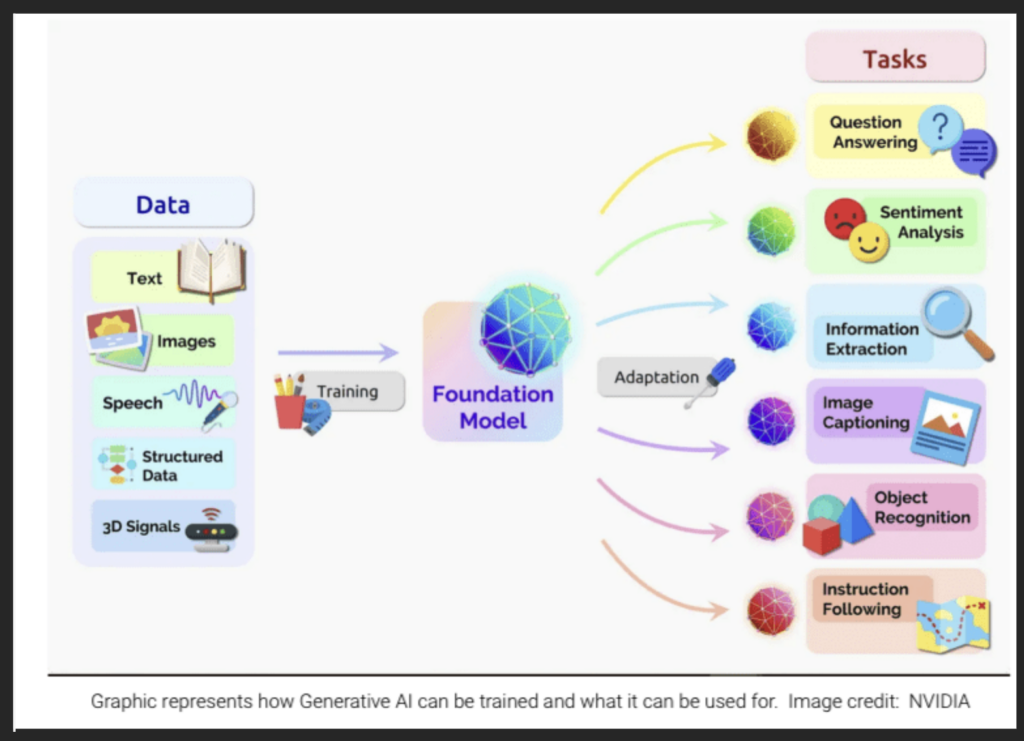

- Access to large quantities of information. Generative AI is trained on a vast amount of information from a variety of sources. This breadth allows it to respond to a wide range of language-related tasks. Used productively, this access can help students gain multiple perspectives on a topic and spark inspiration that leads to greater creativity and ideation.

- Speed of responding or processing. Generative AI provides quick responses to queries, prompts, and requests. This is particularly helpful when we need real-time answers or quick turnarounds. Rather than spending excessive time searching for information, students can gather it efficiently and spend their time on comprehension and analysis. Ultimately, this can help them advance to more complex thinking and learning.

- Automation of routine tasks. Because generative AI can produce human-like text, it can serve as a starting point for almost any type of content, from emails and articles to social media posts and course outlines. It can also act as an editor, turning unstructured transcripts or notes into well-structured text. Students can save time and effort on organizing and editing, allowing them to focus on the more creative and strategic aspects of their work.

- Conversational manner of speech/writing. Its human-like conversational ability makes generative AI an engaging discussion partner (though this also raises serious concerns; see pitfalls below). Generative AI can refine its outputs in response to your prompts over an ongoing discussion, helping you and your students iteratively refine your thinking. For this reason, it can be very valuable to learn simple prompt engineering, the process of refining input instructions to achieve desired results. Well-crafted prompts make these conversational exchanges more productive.

- Assistive technology. Generative AI shows promise for improving inclusion for people with communication impairments, people with low literacy levels, and those who speak English as a second language. It can also ease communication and comprehension in a variety of ways, for example by rephrasing complex text in plainer language or translating it.

Possible pitfalls when incorporating generative AI into the student learning process:

- Engenders a false sense of trust. Generative AI's conversational manner can be concerning because it can create a false sense of trust in the content it delivers. We must be aware that generative AI may disseminate inaccurate or biased information, and we must caution students against treating it as a source of truth.

- Can generate inaccurate information. Generative AI can produce convincing responses to prompts and can offer properly formatted citations. However, it does not always provide accurate or reliable information, because it can replicate outdated information, misinformation, and conspiracy theories that exist in its training data. In all cases, responses generated by AI require careful vetting to ensure their accuracy. It is therefore important for students to take responsibility for verifying the accuracy and reliability of any information they obtain. Doing so helps ensure that students are not only learning correct information but also developing critical thinking and research skills that will serve them well in their academic and professional lives.

- Can generate biased information. Just as generative AI can replicate inaccurate information, it can also replicate biased information. For example, if the data used to train a generative AI tool contains biased or skewed information, the AI may inadvertently reproduce that bias in its results. Again, careful vetting of information is key to productive use of these tools.

- Human authorship. Generative AI can produce responses that closely resemble human-written text, making it difficult for users to distinguish between AI-generated and human-authored text. Additionally, it is often unclear which sources generative AI draws on. This obscuring of human authorship can make it challenging to attribute sources accurately. In fact, if you ask some generative AI tools to add references with citations and links, they may invent false sources, a phenomenon called hallucination (although other tools are becoming more sophisticated and can pull citations from real sources).

- Simulated emotional responses. Generative AI can, in written form, simulate emotional intelligence, empathy, morality, compassion, and integrity. Devoid of nuance, AI simulates an emotional response by drawing on patterns in the vast body of text it was trained on to produce what seems most likely to be an appropriate emotional reply. Users of these tools should remain aware that a simulated emotional response is not the same as genuine interpersonal connection and understanding.

- Lacks context. Generative AI may not have access to the specifics of a course, its class discussions, or recent events, so it may be unable to connect a piece of writing or an argument to the context of that course.

- Proliferation of harmful responses. Through prompt engineering, users can circumvent the safeguards put in place to keep harmful, offensive, or inappropriate content out of responses. Additionally, there are concerns that misinformation (inaccurate information) and disinformation (deliberately false information) will be shared as though it were accurate and reliable.

- Can potentially widen the education gap. The existing digital divide between students with financial means and those without may widen as more powerful AI tools are placed behind paywalls. In addition, differences in digital literacy skills may widen the education gap further.

While generative AI offers numerous benefits to students, its potential pitfalls must be considered. It is crucial for students to verify information, develop critical thinking skills, and exercise caution when relying on generative AI. These practices are integral to developing digital literacy skills, which we will explore later in the series.

In next week's post, we will discuss how to decide whether and how to use AI in your class. If you have questions about generative AI and learning, book a consultation with a CAT specialist.

Emtinan Alqurashi, Ed.D., serves as Assistant Director of Online and Digital Learning at Temple University’s Center for the Advancement of Teaching.

Jennifer Zaylea, MFA, serves as the Digital Media Specialist at Temple University’s Center for the Advancement of Teaching.