
An Assessment Librarian Reflects on 2015

It’s the new year, and a time of reflection. As for me, I’ve been thinking about this (relatively) new position at Temple and the changes since I began in 2014. I love what I do, and here are some of the reasons why.

Assessment is about asking questions. We are always asking why. Data is not the answer; it merely prompts us to ask more questions. Assessment is about growing an organizational culture at the Library that encourages the sharing of our expertise and insights as well as the data we collect. We don’t take our value for granted, and we are willing to change to improve services to our users.

Assessment affords us an opportunity to be transparent in our decision-making. This isn’t always the case, of course, but we do use data to help us understand technology use and shape our technology offerings accordingly, or to determine the best hours and methods for staffing public desks.

Assessment is about building a technical infrastructure that will allow us to learn more about how our users connect to the library. This has been a long and complex technical challenge, but here at Temple we are able to connect demographic data from Banner (user status, college) with transactional data (circulation, interlibrary loan, use of computers and EZproxy) to improve our understanding of how scholars from different disciplines use library services and resources.
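To illustrate the kind of join described above, here is a minimal sketch that links demographic attributes to transaction records through a shared user ID and tallies use by college and service. Everything in it is hypothetical: the anonymized IDs, field names and sample rows are stand-ins, not Temple's actual Banner or transaction schema.

```python
from collections import Counter

# Hypothetical demographic records keyed by an anonymized user ID
# (stand-ins for Banner fields such as user status and college).
demographics = {
    "u1": {"status": "undergraduate", "college": "Science & Technology"},
    "u2": {"status": "graduate", "college": "Liberal Arts"},
    "u3": {"status": "faculty", "college": "Science & Technology"},
}

# Hypothetical transaction log: (user ID, service used).
transactions = [
    ("u1", "circulation"),
    ("u1", "ezproxy"),
    ("u2", "interlibrary loan"),
    ("u3", "ezproxy"),
    ("u9", "computer login"),  # no matching demographic record
]


def usage_by_college(demographics, transactions):
    """Count service use per (college, service), joining on user ID.

    Transactions without a matching demographic record are tallied
    under "unknown" rather than silently dropped.
    """
    counts = Counter()
    for user_id, service in transactions:
        college = demographics.get(user_id, {}).get("college", "unknown")
        counts[(college, service)] += 1
    return counts


for (college, service), n in sorted(usage_by_college(demographics, transactions).items()):
    print(f"{college:22} {service:18} {n}")
```

Keeping unmatched transactions visible under "unknown" is a deliberate choice in this sketch: a growing "unknown" count is itself a signal that the two data sources are drifting out of sync.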

Assessment is about continually learning new technologies and skills, from a less-used Excel formula to regular expressions to SQL. More importantly, for me anyway, it’s learning how to speak the language of technology colleagues so we can most effectively work together.

Assessment is about partnering with all the departments in the library, as well as with external units on campus like IT, Institutional Research & Assessment and the Institutional Review Board.

It’s about helping to develop a community of practice in the Philadelphia area – colleagues at Penn, Drexel, Bryn Mawr, Philadelphia Community College and the many others who contribute to our PLAD meetings. In this blog I have had the opportunity to profile numerous meetings and conferences on library assessment, regionally as well as nationally.

The job is never dull; it ranges from qualitative research projects to working with colleagues on the strategic planning retreat. A recent workflow analysis pulled together staff from three departments to improve our process for rush reserves. Collaborating toward a common goal was instructive for all of us and will result in improved service for faculty and students.

Slowly but surely, we are indeed building a culture of assessment here at Temple Libraries and beyond. Diane Turner, curator of the Blockson Collection, said to me recently, “I think about assessment every time we have an event.” Thanks for that small indicator of change!

Here’s to a new year with more insights and improvements in the service of the Library’s users.

Assessment Drives Decision-Making Process for Health Science Library’s Technology Offerings

Last year I profiled Cynthia Schwarz, Senior Systems & Technology Librarian, and her assessment of computer use at the Ginsberg Health Services Library. She was using the software LabStats to analyze the use of computers at the library. The software allows us to understand not only the frequency of computer use and where the most heavily used computers are located, but also the school and status of our computer users.

This year she’s taking her efforts further, using this data to improve technology offerings and saving money along the way.

NT: What was your question? What are you trying to understand?

CS: We know that technology needs are constantly changing. The Library currently provides over 150 fixed computer workstations, 65 circulating laptops and 32 study rooms equipped with varying levels of technology.

We had anecdotal evidence, based on observation, that not all our computers in the Library were being used. I wanted to understand more about what technology is used and how heavily, so we don’t replace less-used equipment unnecessarily. That’s expensive, and we could deploy those funds elsewhere, serving students better.

NT: What kind of data are you collecting?

CS: The Library has been collecting statistics on the use of library-provided technology since 2014. These data include the number of logins to fixed workstations, the number of loans for each piece of equipment, including laptops and iPads, and the number of print jobs sent to the student printers from fixed workstations and from the wireless printing service. As the hardware comes up for replacement, this collected data serves as an important road map in making decisions about what to replace.

I wanted to use statistics to assess the need for replacements of all this equipment. For instance, in the current fiscal year, 44 public iMac computers were up for replacement. Based on the data collected, no more than 21 of the 44 computers were ever in use at the same time. I determined that some of the iMacs could be eliminated without a noticeable impact on students.

But we couldn’t simply remove computers at random. We looked at the specific machines that were used and where they were located.

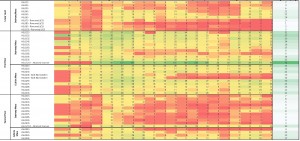

The heatmap shows pretty clearly that there are “zones” in the library (on the 2nd floor, for example) where the computers were less used. These were the computers by the stairway, as well as computers inside cubicles. In this area, students were more likely to use their laptops at bigger tables.

In the end, we reduced the number of iMac workstations from 44 to 27, saving the library $32,900 in the first year and $2,700 each year after.
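The finding that “no more than 21 of the 44 computers were ever in use at the same time” is a peak-concurrency question. The sketch below shows one common way to answer it from login/logout records, a sweep over sorted start and end events. It assumes sessions can be exported as (login, logout) timestamp pairs; the sample sessions are invented, and this is not LabStats' own export format or method.

```python
from datetime import datetime


def peak_concurrent(sessions):
    """Return the maximum number of sessions active at the same moment.

    Each session is a (login, logout) pair. At equal timestamps, logout
    events (-1) sort before login events (+1), so a session that ends at
    10:00 and another that starts at 10:00 are not counted as overlapping.
    """
    events = []
    for login, logout in sessions:
        events.append((login, 1))
        events.append((logout, -1))
    events.sort(key=lambda e: (e[0], e[1]))

    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


def t(hour, minute):
    # Hypothetical helper: a timestamp on one sample day.
    return datetime(2015, 10, 1, hour, minute)


# Invented sample sessions on four workstations.
sessions = [
    (t(9, 0), t(10, 0)),
    (t(9, 30), t(11, 0)),
    (t(10, 0), t(10, 30)),
    (t(10, 15), t(12, 0)),
]

print(peak_concurrent(sessions))  # 3
```

Run over a full year of logins, the peak shows how many machines were ever needed simultaneously; pairing it with per-machine location data is what makes the heatmap-style “zone” analysis possible.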

NT: Do you have any other assessment plans related to technology use?

CS: This example is just the first piece of a much larger puzzle: making decisions based on data to determine how resources should be allocated to best support students moving forward. Throughout the spring 2016 semester, the library plans to review the fixed PC workstations, the study room technology and the laptop and iPad loaners.

The second step is to consider how to redeploy those resources, i.e., the money saved from hardware replacements. The Library is now able to invest in different technologies such as Chromebooks, 3D printing and scanning, and specialized computers with high-end software.

NT: How do you learn about what those new technologies should be?

CS: Because of its size, the health sciences library is a perfect testing ground for new technology offerings. We stay current with what other health sciences libraries are doing, we carefully analyze our usage data, and we talk to students.

NT: Thanks, Cynthia. Your work is a great example of using data to inform decision-making. This kind of analysis could be applied at the other library locations too. I imagine the technology use profiles at Paley, Ambler or SEL would look quite different, and that would generate some interesting questions too.

Assessment in Atlanta

I recently attended the Southeastern Library Assessment Conference, a great forum for hearing about (mostly) academic library assessment activities in the Southeast and beyond. Several sessions addressed a topic of special interest to libraries these days: How do we identify measures that demonstrate value and that are meaningful for libraries?

The conference organizers were quite brave in kicking off the conference with comedic duo Consilience with Pete and Charlie. Both have day jobs at Georgia Tech. Pete Ludovice is an engineering professor by day, a stand-up comic by night. Charlie Bennett is a humanities librarian.

Provided with Amos Lakos’ article on creating a culture of assessment as fodder, their back-and-forth riff on the future of libraries (is there really a need?) and assessment was provocative and fun at the same time. Are there ways in which assessment actually hinders innovation? The take-away: start-ups take time. If you want innovation and creative thinking to thrive at your library, approach assessment in a “loose,” relaxed way. Don’t be draconian about the numbers at the start. Assessment is not just about statistical significance. Embrace failure – we fail all the time and need to learn from that.

That said, we assessment librarians do like to collect numbers. So how do we manage all of the data that we are collecting?

Wrangling the Megalith – Mapping the Data Ecosystem

Mark Shelton reported on such a data identification and management project at Harvard University, where he is Assessment Librarian. Understanding the data that is available, where and how it is collected, and how elements relate to one another is, Mark suggests, a taxonomy problem, and one that librarians are good at solving. His approach thus far has been to examine many sources of data and to meet with staff to talk about assessment and the data they are collecting. The data being wrangled comes from:

- 73 libraries

- 931 staff

- 1,364 circulating collections

- 78 borrower types

And the data taxonomy comes with its own clever acronym:

COLLAPSE

- Collection

- Object Type – books, film, coins

- Library – physical places associated with schools, rooms, carrels

- Loot – Projected, real, strategic alignment – Harvard has thousands of endowments

- Activity – reference, learning, digitization

- People – 78 borrower types

- Systems – Internal, external, citation, collaboration – data in all these systems

- Events – transactions for reference, article downloading

With this kind of complexity, even analyzing a data set as seemingly straightforward as circulation data is not for the faint of heart. But it’s essential work. We must map our data to what we say are our values. We must also recognize how this data can contribute to strategic planning efforts, although different stakeholders will want to look at and interpret the data in different ways.

Metrics with Meaning

The need to recognize our different stakeholders was a message in another presentation, from Lisa Horowitz, Massachusetts Institute of Technology; Kirsten Kinsley, Florida State University; Zsuzsa Koltay, Cornell University; and Zoltán Szentkirályi, Southern Methodist University.

Their session had two goals: the first was to discuss strategies for working with publishers as they update their requests to libraries, so that the metrics are meaningful to libraries and to their respective audiences. The impetus for the question was a request from Peterson’s College Guide for data on the number of microforms owned by the library. Does the data we publish about our libraries help or hinder our marketing of the libraries?

Publishers have interests too. They have audiences that are different from those of librarians and institutional administrators. Our challenge is to work with publishers to identify metrics that are useful to prospective students, for instance.

Through a set of messages to listservs, the authors gathered ideas about library metrics that could be useful to prospective students. A sampling:

- Is there 24/7 available space for study and access to library resources?

- Are laptops or other equipment available to borrow at the library? Are textbooks placed on reserve for their use?

- What kind of reference support is available, and how – in person, virtual?

- Students might prefer to know about the use of the web site and institutional repository before they’d care about the number of microfilms.

- What are the unique collections owned by the library? What is the ratio of collection size to student population?

It’s clear that there is no “one size fits all” set of metrics. A school with a focus on distance education will attract students with certain facts (online journals, virtual reference), while on-campus students will look for study and collaborative work space in the library.

The presenters pointed out how metrics project an image. What we want to avoid is an outdated image of library services and resources persisting and influencing how people perceive the library. The challenge is overcoming inertia and reconsidering the collection of entrenched statistics, and then coming to consensus on what new measures should look like.

Many of the presentations are available on the Georgia State institutional repository at: http://scholarworks.gsu.edu/southeasternlac/2015/

The Library in the Life of the User

I had the privilege last week of attending the OCLC Research Partners Meeting on The Library in the Life of the User. We shared reports on the current state of user research, with a particular focus on qualitative methods: interviews about work practice, mapping exercises and observational studies. While these studies are yielding some fascinating insights into how our users find and organize their research, the most provocative talks pushed us to think beyond what users are currently doing, to imagine what they could be doing, and to consider how libraries can best share those stories.

Lorcan Dempsey

Dempsey, Chief of Strategy for OCLC Research, provided the opening keynote for the meeting. He reminds us that libraries are not ends in themselves, but serve the research and learning needs of their users. The major long-term influence on libraries is how those needs change. To be effective, libraries need to understand and respond to those changes.

- Increasingly, schools are defining their missions in particular ways: land grant institution, career focus, research university

- They are moving from operating like a bureaucracy to operating more entrepreneurially

- There is an increasing demand for analytics and assessment to demonstrate impact

As these aspects shift, how do we collaborate differently with the institution, aligning the library’s vision and objectives with those of the organization?

Paul-Jervis Heath

Heath is not a librarian. His insights into user research come from the perspective of a user experience designer. He is a principal with Modern Human, a design practice and innovation consultancy. When librarians look at a service, he says, we must ask:

- How do the parts of this experience relate to one another?

- What is the shape of this thing?

- What is the value that this thing will deliver?

The basic expectation of an experience is that it be understandable, interesting and useful. Exceptional experiences (Spotify was an example he used) are compelling and indispensable.

Joan Lippincott – Beyond Ethnography

Lippincott is Co-Director of the Coalition for Networked Information and is well known as an expert in learning space design. She asks us to think beyond what students are doing now and to consider what they could be doing in the future. Are we asking the right questions? If the user community doesn’t know what we are doing now, she asks, how can they tell us what the future should look like? And the recurrent theme: “Are we aligning our services, spaces, and technology with the university’s teaching & learning and research aspirations?” She sums up: “Ask good questions that will yield meaningful, actionable responses.”

Stanley Wilder – Mixed Methods and Mixed Impacts: What’s Next for Library-based User Needs Research?

Wilder is the Dean of Libraries at Louisiana State University. He had the difficult task of speaking last, when the crowd was oversaturated with information and ideas, and he was up to the challenge. He spoke from the perspective of a library administrator, asking that the library’s user research function consider more broadly the kinds of data and mixed methods it can draw on; card swipes and transaction data are examples. He asked us to “follow the money”. If half of our budget is spent on collections, let’s be sure to understand how those collections are used.

I would agree with his assertion that we don’t have the data points to tell the story we want to tell. If we continue to allow “print circulation to serve as a proxy as an indication of our usefulness, we will not survive the scrutiny of campus administrators.” On the other hand, if the library can serve as the “go to” place for our deep understanding of how faculty and students do their work, our essential role within the university is made all the stronger.

Planning Assessment within the Institutional Context

Last week I was privileged to conduct an assessment workshop with two gurus in the field: Danuta Nitecki, Dean of Libraries and Professor, College of Computing and Informatics at Drexel University and Robert E. Dugan, Dean of Libraries at the University of West Florida. They are authors, along with Peter Hernon, of one of my favorite guides, Viewing Library Metrics from Different Perspectives: Inputs, Outputs and Outcomes and have collaborated in other venues related to assessment in libraries and higher education.

We conducted a pre-conference workshop as part of Drexel’s 2015 Annual Conference on Teaching & Learning: Building Academic Innovation & Renewal.

Librarians and their Value to the Student Learning Experience was designed to bring together librarians and higher education professionals to experience planning an assessment project, identifying partnerships and resources across the campus for doing effective assessments of learning. The stated outcomes were that participants would be able to:

- Identify what librarians are typically doing

- Apply a method to gather data

- Build stronger campus collaborations and assessment strategies

Our participants came from a variety of institutions and roles: directors, institutional research, assessment librarians; from both public and private schools in the region (four states!).

The workshop started with an introduction from Bob, who addressed the question of why we conduct assessments. He approaches this from his dual role as a library dean who also serves as head of institutional research and assessment. We conduct assessment for accountability to stakeholders (e.g. parents who ask, “Is my child getting a good education?”), for compliance (accreditation), and for the continuous improvement of programs, activities and services.

Danuta provided participants with a framework for approaching assessment within the university/college context. The “logical structure” exercise had us break down an assessment need into three elements: articulation of the assessment purpose, the evidence sought for making judgements, and selection of an appropriate method of gathering that evidence.

We brainstormed together about the myriad kinds of contributions librarians can make to the institutional assessment effort in the form of collections, services and facilities. For instance, we may have:

- learning outcomes data from our instruction program

- expenditures and other quantitative usage data on our collections

- guidance on copyright, academic integrity, research data management

- valuable, neutral space and technology for student and faculty work

The challenge for us as librarians is to think about how these data can be used in ways that connect up with the stated mission and values of the institution. How does our work contribute to student success? Their engagement with the school? How do we help faculty in their teaching and research? What contributions does the library make towards institutional reputation through programs and publication?

In the final segment of the workshop we talked about the practical considerations of planning for assessment. Our question has been defined, and our data needs and sources identified. We’ve designed an appropriate method for gathering the evidence. In order to carry this out, we need to create a budget, identify the staff and skills required, allocate staff time and set a time frame for conducting the assessment. Whether or not our research is exempt from review, we’ll want to ensure that we’ve connected with the Institutional Review Board.

Perhaps most importantly, our plan for presenting assessment results to stakeholders needs consideration. The communication may take multiple forms, depending on its purpose and audience: a paragraph embedded in an accreditation report, a set of slides for a meeting with the provost, or a 10-page report for library staff.

Based on evaluations, the workshop was a useful and thought-provoking one for participants. Thanks again to Danuta and Bob for their huge efforts on this project – I know that it’s got me thinking more broadly about our assessment work here at Temple.

Posted in organization culture and assessment

Tagged assessment of learning, conferences


Drexel’s Service Quality Improvement Initiative Reaps Benefits for Users

John Wiggins, Director of Library Services and Quality, has been engaged with implementing service quality at Drexel University Libraries since 2012. This more systematic attention to quality improvement was initiated by the dean, Danuta Nitecki, and John has steadily built the effectiveness of these methods in the library environment.

His experience is a great example of how changing an organization’s culture takes time and engagement from all levels of staff, and how, while it may not always yield the results we think we want, it can yield more than we bargained for.

The Service Quality Initiative program was launched in January 2012 with a visit from Toni Olshen, business librarian at the Peter F. Bronfman Business Library at York University’s Schulich School of Business. Olshen is an expert in service quality measurement and customer-centered activities. Through her formal presentation and informal coffee hours, Olshen introduced the concept of service gap analysis, which looks for potential gaps between perceived and expected service.

Since the Service Quality Improvement launch, John has engaged staff in four formal SQ projects, looking at:

- Interlibrary loan

- Access to online databases provided by the libraries

- Self-service checkout

- Rush orders for reserves

The first service quality project looked at the interlibrary loan process – for all libraries an area of potential inefficiency and complexity, yet essential in providing research support for our students and faculty. A group of fifteen staff from throughout the Library looked for high impact, high return on investment changes.

In providing interlibrary loan service, gaps might exist between perceived and expected policies, processes, external communications or services. For instance, if ILLiad status updates are not meaningful to patrons, that is an external communication gap. These issues were examined, and those that, if addressed, would have the broadest impact and highest return on investment (or “bang for the buck”) were given priority.

As staff became more familiar with the process, the system that worked best for Drexel was codified. For instance, smaller teams worked better, provided they still included all the experts in the process. A modified Lean approach is now in use, in which all steps in a process are carefully documented by the key participants. The process of ordering rush reserves, when fully described, involved 89 steps. These were itemized on a spreadsheet with the average time each step took (how we “think” the process works). In the final step, the process was actually conducted and timed. Not only were staff able to identify ways to make the process more efficient, but they also came to appreciate the complexity of what their colleagues were doing. By articulating and documenting the process, and by sharing it with staff outside the process who could look at it with new eyes, several steps may be eliminated. Yay for Service Quality Improvement!
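The spreadsheet comparison described above (estimated step times versus times observed when the process is actually run) can be sketched in a few lines. The step names and minutes below are invented for illustration; they are not Drexel's 89-step rush reserves data.

```python
# Hypothetical excerpt of a documented process: each step has the
# minutes staff *think* it takes and the minutes observed when the
# process was actually conducted and timed.
steps = [
    {"step": "Receive rush request", "estimated": 2, "observed": 3},
    {"step": "Check catalog for holdings", "estimated": 5, "observed": 9},
    {"step": "Re-key request into order form", "estimated": 3, "observed": 4},
    {"step": "Notify instructor", "estimated": 2, "observed": 2},
]


def summarize(steps):
    """Return (estimated total, observed total, steps sorted by gap).

    The gap is observed minus estimated time; the steps with the
    largest gaps are candidates for a closer look by the team.
    """
    estimated = sum(s["estimated"] for s in steps)
    observed = sum(s["observed"] for s in steps)
    by_gap = sorted(steps, key=lambda s: s["observed"] - s["estimated"], reverse=True)
    return estimated, observed, by_gap


est, obs, by_gap = summarize(steps)
print(f"Estimated total: {est} min; observed total: {obs} min")
print("Largest gap:", by_gap[0]["step"])
```

Sorting by the gap rather than by raw duration is one reasonable design choice: it points the team at the steps where shared assumptions about the process are most wrong, which is where a fresh pair of eyes tends to help most.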

And kudos to John, who in his own efforts models continuous improvement in providing a better user experience – as he builds on his learning each time he undertakes a new service quality project!

Drexel’s Principles for Process Evaluation (based on Lean approach)

- Goal is to reduce customer time and increase convenience

- Secondary goal is to reduce costs to allow for reallocation of resources

- Staff involved in process are on the team

- Improvement team members contribute knowledge and ideas to team’s efforts

- Team will examine all aspects/angles of the process for potential improvements

- Improvements may range from tweaks of minor tasks to re-envisioning the process or the Library’s program to deliver the service

If you’d like to read more about the use of Lean in higher education and libraries:

Lean Methodology in Higher Education (Macquarie University)

A Culture of Assessment: The Bryn Mawr Example

This month I had the pleasure of visiting Bryn Mawr College as part of a staff development event hosted by the Assessment Working Group for Library & Information Technology Services (LITS). The group is convened this year by Olivia Castello, who also serves as Outreach & Educational Technology Librarian. Olivia and her colleagues have presented results of their assessment projects at the Philadelphia Libraries Assessment Discussion group. I spoke with Olivia about how they are developing a culture of assessment at Bryn Mawr.

NT: How did the Assessment Working Group get started? How is it organized?

OC: The group was founded at the request of our new CIO and Director of Libraries, Gina Siesing. The purpose was to leverage the wide range of skills across the organization, approaching assessment in a coordinated way. The charge is to “help us understand the effectiveness of our current programs and the interests and goals of our community, and to inform LITS’s ability to plan program and service changes, continuously improve, and meet the College’s evolving needs.”

The committee members are selected based on their expression of interest and their job role. The position of convener rotates annually. The convener is responsible for moving the group forward, as well as for practical things like scheduling meetings and planning agendas. The first thing I did as convener was have a one-on-one with Gina to get her sense of where the group should focus its energies and what her goals for assessment were.

The working group doesn’t actually do the assessments, but it promotes a culture of assessment. We may serve as consultants or help a project manager do assessment planning.

NT: In what other ways do you foster a culture of assessment?

OC: Assessment is a requirement within our project management process; an assessment plan needs to be in place for a project to be approved. A project could be anything that’s not an ongoing service (e.g. renovating a computer lab, piloting a new type of instruction). The assessment could be simple or complex. Putting the assessment piece up front for project approval was an opportunity for the group to promote the importance of thinking about assessment at the beginning rather than after the fact. It will hopefully result in better, more systematic assessment, and a baby step towards using the data that we already collect.

So far in 2015 we have done some initial brainstorming as the first phase of an assessment data audit. We also organized a staff development workshop for LITS (and Tri-College Library consortium) staff members interested in learning more about assessment. Within the working group, we have also devoted meeting time to reading/discussing scholarly literature on library and IT assessment, exploring what assessment means to different stakeholders in our organization, and brainstorming different ways of talking about assessment to different audiences.

NT: Can you tell me about a specific assessment project you’ve been involved with?

OC: LITS already does a lot of assessment and every part of the organization uses data to make decisions – one particular project that I have been involved with is space planning for Canaday Library (http://www.brynmawr.edu/library). Even though we haven’t yet received capital funds to renovate our main library – the staff who manage the building have been able to make some simple changes (e.g. furniture placement, adding a public question/comment and answer board, adjusting carrel policies, improving signage, etc.) based on the data collected as part of our participatory design research. More about that project: http://repository.brynmawr.edu/lib_pubs/14/

We would like to try to get more feedback from students even in small ways, like using short interviews with a paper prototype of our research guides.

NT: What are some challenges that you are facing as you develop a culture of assessment at Bryn Mawr?

OC: One challenge is that assessment data are collected in a variety of formats and frequencies, and possibly with different understandings and assumptions by different groups. It can be hard to know how, or whether, to merge similar data, for example. Staff may sometimes feel that they don’t have the expertise to design complicated assessment projects or analyze data. Also, people may see assessment as something that takes extra time, time they don’t have to devote to it. We are trying to address this in the Working Group by sponsoring professional development and by serving as consultants in assessment planning.

NT: What do you see as some activities that you’ll be engaged with in the future?

OC: We will continue our assessment data audit by conducting formal interviews with LITS staff about what data they are collecting, with what frequency, where it is reported, what decisions it influences, etc. Virginia Tech librarians used the Data Asset Framework developed at the University of Glasgow; we may use that framework or develop a less detailed one of our own. Our ultimate goal is to facilitate some form of assessment data repository to help with internal decision making and to aid in communicating LITS’ value to external stakeholders.

NT: If I were to come to you seeking advice on how to initiate an assessment program like you have here at Bryn Mawr, what would you tell me?

OC: Even if you do have a full-time assessment librarian, I recommend convening some sort of working group. Regular discussion with representatives from across an organization can help provide different perspectives and develop an understanding of what assessment practices and attitudes towards assessment are already in place at an institution. A listening tour, or formal set of interviews with co-workers across the organization, is a good way to get the “lay of the land”.

It is also important to have the buy-in and sponsorship of the CIO/Library Director or other organizational leadership. They can help to refine the group’s priorities and articulate how the organization’s assessment efforts will connect to larger institutional goals, as well as how they may be relevant to outside audiences.

NT: This is really good advice. These strategies for building assessment practice are key for both small and large libraries. Thanks, Olivia!

Improving the Discoverability of our Digital Collections

Doreva Belfiore, Digital Projects Librarian, invests much of her time improving access to digital collections and making these collections findable on the open web. I talked with her recently about how her work helps to enhance the discoverability of Temple’s digital collections.

NT: So what is the goal of what you are doing?

DB: Ideally, we would like the digital collections to reach the greatest number of people as freely as possible. Considering how much time and energy goes into the preservation, cataloging and digitization of these collections, it would be a shame not to make them as broadly findable as possible.

NT: Findable to whom and how?

DB: We currently use Google Analytics to gain insight into the traffic on our library website. Google Analytics is an imperfect tool, but it can tell us how many people access the collections through open searches on the web. It can tell us whether visitors reached our collections through a search engine (such as Google) or through a link from a blog or other site.

We can learn how users found our site (whether a search was used, whether they typed in a web address, or whether another site, like Wikipedia, referred them to us).

We can identify our most popular items and see whether there is specific interest in particular sets of materials. However, we have limited information on how exactly our users navigate through the site, or even who they are (e.g., age or gender).

Advanced researchers may find our materials when the archival finding aids produced by SCRC are indexed by Google. Theoretically, we could look at these sources as referrers.
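Google Analytics performs this kind of source classification itself, and its real channel rules are considerably more elaborate. As a rough illustration of the idea only, the sketch below buckets a visit by its referrer URL into "direct", "search" or "referral". The hostname list and the `classify_visit` function are hypothetical and are not part of Google Analytics.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical, deliberately short list of search-engine hostname fragments.
SEARCH_ENGINES = ("google.", "bing.", "yahoo.", "duckduckgo.")


def classify_visit(referrer: Optional[str]) -> str:
    """Roughly bucket one visit by its referrer URL: 'direct', 'search', or 'referral'."""
    if not referrer:
        # No referrer: a typed-in address, a bookmark, or a referrer the browser withheld.
        return "direct"
    host = urlparse(referrer).netloc.lower()
    if any(fragment in host for fragment in SEARCH_ENGINES):
        return "search"
    # Any other site, e.g. Wikipedia, a blog, DPLA, or an indexed finding aid.
    return "referral"


if __name__ == "__main__":
    from collections import Counter

    sample = [
        "https://www.google.com/",
        None,
        "https://en.wikipedia.org/wiki/Philadelphia",
        "",
    ]
    print(Counter(classify_visit(r) for r in sample))
```

Counting finding-aid pages as their own bucket would only require matching their hostname before the generic "referral" case.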

So with small, incremental changes we are able to increase discovery and quantify the effect of those changes through Google Analytics. However, we’re not always sure what is attributable to the changes we have made. An increase in site visits could also be a natural spike due to publicity, the growth of our collections, or inclusion in third-party sites and portals such as the Digital Public Library of America.
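One rough way to address the attribution problem is to compare the collection you changed against a similar collection you left alone, in the spirit of a difference-in-differences comparison. This is a minimal sketch under assumed, hypothetical monthly visit counts; it is not a description of Temple’s actual analysis, and it only partly controls for site-wide spikes that affect both collections.

```python
from statistics import mean


def percent_change(before: list[float], after: list[float]) -> float:
    """Percent change in the mean of a metric (e.g. monthly visits) between two periods."""
    baseline = mean(before)
    return (mean(after) - baseline) / baseline * 100


def relative_lift(changed_before, changed_after, control_before, control_after) -> float:
    """Percent change for the modified collection minus the percent change for an
    unmodified comparison collection, in percentage points. Spikes that affect both
    collections (publicity, a new portal) partly cancel out."""
    return (percent_change(changed_before, changed_after)
            - percent_change(control_before, control_after))


# Hypothetical monthly visit counts, three months before and after a metadata change.
changed_before, changed_after = [1200, 1150, 1250], [1400, 1380, 1420]
control_before, control_after = [800, 820, 780], [840, 860, 820]

print(f"raw change: {percent_change(changed_before, changed_after):.1f}%")
print(f"lift vs. comparison: "
      f"{relative_lift(changed_before, changed_after, control_before, control_after):.1f} points")
```

Even with a comparison collection, a few months of counts can only suggest an effect; they cannot prove one.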

NT: Other than analytics – are there things we could do to assess discoverability?

DB: Colleagues in public services and special collections report back to us on how users find our materials, although this evidence is anecdotal. They report that some students have trouble finding digital materials from within SUMMON. We don’t know whether Dublin Core mappings affect SUMMON indexing, but we’re continually looking at how to get our local digital collections to rank higher in the search results.

NT: Recognizing that this is an ongoing initiative, what are some next steps?

DB: Well, as I said, this is an iterative process. Another venue for discovery is Wikipedia, so providing opportunities for library staff to learn about editing Wikipedia would be a positive step. We recently took part in a Wikipedia “edit-a-thon” here in Philadelphia, and interested librarians were encouraged to participate. Here at Temple we’re in the process of getting an interest group off the ground.

NT: Any final words?

DB: Sure. Improving the discoverability of our digital collections means coming at it from many angles, and we expect small results to build incrementally as we improve:

- cataloging

- workflow

- discoverability

- organization

- communication

The important thing is to get different people together at the table, as discoverability requires skills from all over the library.

Report from ARCS: Perspectives on Alternative Assessment Metrics

This month I had the good fortune to attend the inaugural Advancing Research Communication and Scholarship meeting here in Philadelphia. The best panels brought together researchers, publishers, service providers and librarians with contrasting yet complementary perspectives on how best to communicate and share scholarship.

As an assessment librarian looking at ways of demonstrating the impact of scholarship, I was particularly excited by the conversations surrounding “altmetrics” (alternative assessment metrics). Coined by Jason Priem in 2010, the term describes metrics other than traditional citation counts or impact factors; altmetrics count “attention” on social media such as Twitter. Conference participants debated the limitations and value of these new measures for understanding impact, and explored their use for scholarly networking and for tracking use of non-traditional research outputs like data sets and software.

Todd Carpenter (National Information Standards Organization) reported on the NISO initiative to draft standards for alternative metrics. Carpenter points out that altmetrics will not replace traditional methods for assessing impact, but he asks, “Would a researcher focus on only one data source or methodological approach?” In measuring the impact of different formats, we need to ensure that everyone is counting things in the same way. The initiative’s first, data-gathering phase has resulted in a white paper synthesizing hundreds of stakeholder comments, and the initiative will suggest potential next steps toward a more standardized approach to alternative measurements. For more information about the initiative and to review the white paper, visit the project site at http://www.niso.org/topics/tl/altmetrics_initiative/

SUNY Stony Brook’s School of Medicine has taken a very active approach to making researcher activity and impact visible. Andrew White (Associate CIO for Health Sciences, Senior Director for Research Computing) spoke about the School’s use of altmetrics in building a faculty “scorecard,” a set of standardized profiles showing education, publications and grants activity. White sees altmetrics as a way of contributing to these faculty profiles, providing evidence of impact in unanticipated areas, such as citations in policy documents. Through altmetrics they’ve unearthed additional media coverage (perhaps in the popular press) as well as evidence of the global reach of their faculty and clinicians.

Stefano Tonzani (Manager of Open Access Business Development at John Wiley) provided a publisher’s perspective. Wiley has embedded altmetrics data into 1,600 of its online journals. This can be a marketing tool for editors, who “get to know their readership one by one,” as well as a way of attracting authors, who like to know who is reading, and tweeting about, their research. Tonzani suggests that authors use altmetrics to discover and network with researchers interested in their work, or to understand more deeply how their paper influences the scientific community.

On the other hand, according to the NISO research, only 5% of authors even know about altmetrics! As librarians, we have a great deal to do in educating faculty and students about developing and attending to their online presence in order to broaden the reach of their scholarly communication.

Library Assessment Goings-on in the Neighborhood

Philadelphia-area librarians practicing assessment have a rich resource here in our own backyard. PLAD (Philadelphia Library Assessment Discussion) is a network of librarians who meet in person and virtually to connect with and learn from one another.

We met this month at Drexel’s Library Learning Terrace to share five-minute lightning presentations on a range of topics, from using a rubric to assess learning spaces to conducting ethnographic research with students.

John Wiggins (Drexel) started us off with “Tuning Our Ear to the Voice of the Customer.” Drexel gathers data from students in a variety of ways, from a link on the library website to regular meetings with student leadership groups. A strong feedback loop lets the library know what students are happy, concerned or confused about, such as why the library’s hours changed, and gives library staff an opportunity to let students know why those decisions were made. (The hours change was based on transaction data.)
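The talk did not go into how Drexel analyzed its transaction data, so the following is only a hypothetical sketch of the general approach: tally transactions by hour of day, then flag open hours with little activity. The ISO-8601 timestamp log, the `threshold`, the opening hours and both function names are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime


def transactions_by_hour(timestamps: list[str]) -> Counter:
    """Count transactions (e.g. desk questions or gate entries) per hour of the day."""
    return Counter(datetime.fromisoformat(ts).hour for ts in timestamps)


def quiet_hours(counts: Counter, threshold: int, open_hours: range) -> list[int]:
    """Open hours whose total transaction count falls below `threshold`.
    Counter returns 0 for hours with no transactions at all."""
    return [hour for hour in open_hours if counts[hour] < threshold]


# Hypothetical one-day log; a real analysis would cover many weeks.
log = [
    "2015-03-02T09:10:00",
    "2015-03-02T09:45:00",
    "2015-03-02T14:05:00",
    "2015-03-02T23:30:00",
]
counts = transactions_by_hour(log)
print(quiet_hours(counts, threshold=2, open_hours=range(8, 24)))
```

Quiet hours are a starting point for discussion rather than an automatic answer; the feedback loop John described is what lets the library explain such a change to students.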

Merrill Stein (Villanova) spoke about regular surveys of faculty and students and the challenge of writing questions that are clearly understood. While asking the same questions over time allows for trend analysis, sometimes we need to ditch the questions that just don’t work.

For a school with a small staff, Bryn Mawr librarians are very active in assessment. Melissa Cresswell spoke about using mixed methods (participatory design activities with students, photo diaries) to gather feedback on how library space is used. The library changes its spaces incrementally, and the rich qualitative data is used again and again; although the sample sizes are small, themes emerge. The overarching theme is that students want to be comfortable in the library.

Olivia Castello (Bryn Mawr) presented her research on the impact of the flipped classroom on library instruction. She wanted to know if this model, where students are provided with a tutorial as “homework” prior to their session at the library, had an impact on student success with an information literacy “quiz” after the session. While she’s found a correlation between the tutorial and student success, she’ll need a more controlled study to demonstrate real causation.

Danuta Nitecki (Drexel) introduced us to a rubric designed by the Learning Space Collaboratory for assessing learning spaces. We talked about which behaviors signify active learning and what kind of space and furniture best facilitates those behaviors.

My own presentation was on cultivating a culture of assessment and how challenges can be turned into opportunities. I used this blog as an example of one such opportunity: Assessment on the Ground serves as a vehicle for sharing best practices and generating conversation about library assessment in all its forms.

Marc Meola (Community College of Philadelphia) introduced us to Opt Out, the national movement against standardized testing. He suggested that for instruction assessment, frequent low-stakes testing may be a better method, since students and teachers both see the results and can learn from them.

ACRL’s Assessment in Action program has a growing presence in our community, as evidenced by two presentations related to that initiative. Caitlin Shanley (Temple Libraries’ own Instruction Librarian and Team Leader) described the program’s goals as helping librarians build relationships with external campus partners and become part of a cohort of librarians practicing assessment. Elise Ferer (Drexel) proposes to improve our understanding of how Drexel’s co-op experience relates to workplace information literacy. The proposal builds on the strong relationship between Drexel’s library and its career center.

The meeting also provided ample opportunity for small group conversations about the presentations. We discussed the iterative nature of assessment – from tweaking a survey from year-to-year to using mixed methods for a more robust picture of user experience. We are all challenged to design good surveys and have come to recognize the limitations, at times, of pre-packaged surveys for informing local questions. All of us struggle with the impact of increasing survey fatigue. Perhaps this is an opportunity to be more creative in how we do assessment. In generating and fostering that creativity, the PLAD group provides an excellent and fun way of supporting and learning from our colleagues.

Thanks to everyone who participated!