By Laura Biesiadecki

An Important Note on the Data Life Cycle…

Gathering and curating a batch of data can be an intricate and time-consuming task — as you develop your own digital project, think about how the collection can be preserved. If you plan on submitting this data to a repository, make sure to reach out to a librarian before you start! There are plenty of things to keep track of during the gathering process that will be immensely helpful when the time comes to share your data set.

Time to Tidy Up

Once your digital project has been outlined, and once you’ve established appropriate parameters for your corpus, it’s time to collect and clean workable versions of relevant titles. And whether you’re lounging in the ivy like the young woman above, trying to determine if the gentleman in the wide-brimmed hat is of marriageable stock, or working from home, you’re ready to dive in.

The process for gathering and editing your documents will depend on the resources you’re working with and where they come from. Thankfully, a majority of the titles on my list were found as both PDF and text-only files on HathiTrust, so I didn’t need to use an Optical Character Recognition (OCR) software to process the scanned pages. The titles I did need to convert were downloaded as PDFs from various library databases and processed using Adobe Acrobat’s OCR tool.

The Basics of Document Cleaning: Find and Replace

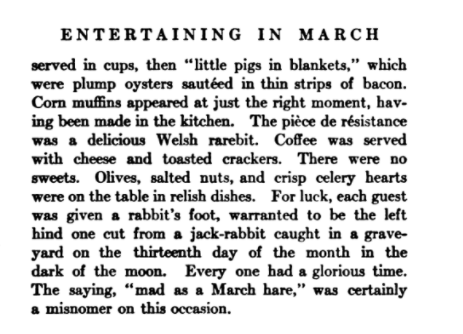

In each of the full-text versions of etiquette books I download, there are errors that need to be removed so future textual analysis can be as accurate as possible. Thankfully, most of the errors in these documents are obvious and repeating, making it easier and significantly less time-consuming to clean. We can look to Dame Curtsey’s Art of Entertaining for All Occasions (1918) for an example — I’m sure she’d be thrilled.

The format of the original printed page looked like this:

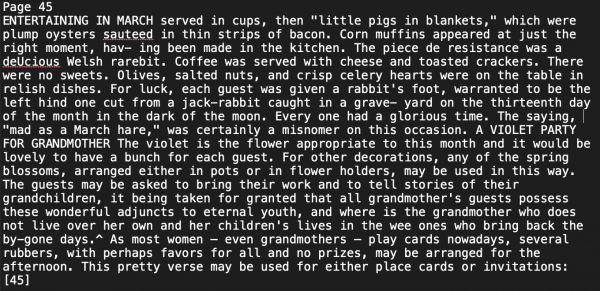

And the downloaded text file looked like this:

There are several things that need to be cut from this text, like the bold “Page 45” and the oddly spaced “E N T E R T A IN IN G IN M A R C H”. Since these headers appear on every page of the original document, they’ll need to be erased. A simple “find and replace” will be helpful, but more often than not these headers are complicated with different spacing patterns, misspellings, or punctuation. To be sure they’re all cut, scroll down and check the first text lines of each “page.”

It’s also important to watch for words that were split between two lines in the original document. In this selection, “having” on the third and fourth lines, and “graveyard” on the tenth and eleventh lines, appear in the text file as “hav- ing” and “grave- yard.” Inconvenient, yes, but not difficult to fix. It’s easy enough to find and replace all instances of “- ”, bringing those lonely word-halves back together again, and the very low-tech cleaning required for errors like these takes mere minutes.

Some Heavy-Duty Scouring

Some documents, due to formatting or quality of the original scan, are more difficult to manage. The formatting issues associated with my particular collection are fairly standard: almost every text is muddled by illustrations, hand-written letters, lists of necessary kitchen appliances, arranged in three columns, and oddly arranged recipes for anti-aging sheet masks (seriously!). Unfortunately, variations of these errors in image-to-text translation are more than likely to pop up, and will introduce unique roadblocks to your document cleaning process.

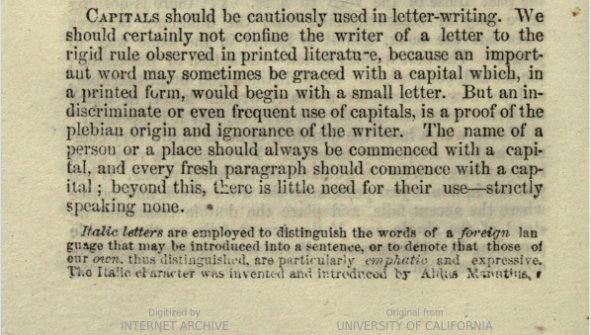

In his Chesterfield’s Art of Letter Writing Simplified (1857), Philip Dormer Chesterfield, Earl of Stanhope, uses footnotes at the bottom of each page to give his reader information about appropriate use of punctuation:

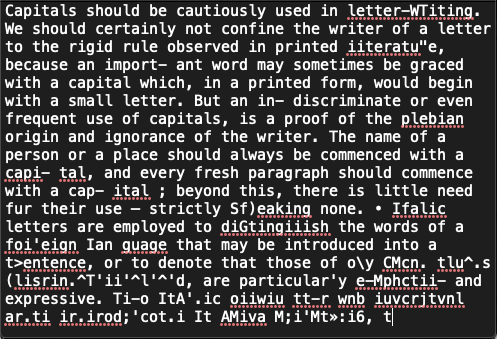

Unfortunately, the scanned version of this text isn’t quite able to handle the fine print:

We can see a few familiar and easy-to-clean errors (the “- ” in “capi- tal”), but the section turns to unintelligible mush after the standard-sized paragraph ends. I’m left wondering, What am I supposed to do with that?

When faced with more complicated errors, you have the option to clean or cut. Depending on the content of broken text — is this section necessary? does it contribute something relevant and new to the document? — and the frequency of this particular formatting issue, your decision will be made for you.

In the case of Chesterfield’s Art of Letter Writing, there are footnotes on a majority of the text’s 156 pages. It would take valuable time to go through the original scanned images, read each footnote, decide whether it was worth saving, and type the sections deemed relevant. I’d also be setting myself up for hours of future work, committing to the same intensive cleaning for the other 200+ titles in my data set. Further, these particular footnotes are not necessary to a project about general etiquette; letter writing was certainly important, but I have little use for musings on the proper use of italics.

While cleaning this, and at least a dozen other titles in my collection, supplementary content was cut in favor of efficiency.

A (Not Completely) Clean Sweep

The most important step in the document cleaning process is accepting that your final TXT products won’t be perfect. To make the most of your working hours, you’ll need to decide how many errors are acceptable and which sections of text you can afford to lose.

For this project, each of my cleaned text documents will have no more than 10 errors in the first 300 words, and all samples of letters or invitations will be cut, as will footnotes and advertisements for products or other books. For another project, these elements will be invaluable, and I’ll be taking note of which titles feature handwritten templates for wedding invitations. But for the purposes of my project, their content is not worth the time I’d have to spend cleaning it.

I’d be lying if I said the cleaning process wasn’t the most tedious part of my research so far, but a set of clean documents makes for more accurate data and more engaging analysis. In my next post I’ll discuss preliminary exploration with Voyant, and my experience learning (and writing!) code designed to analyze literature and non-fiction.