By Jeff Antsen

Intro

The field of Text Data Mining (TDM) offers tools for identifying interesting and meaningful themes in written language. Some of these themes might be accessible for the average human reader, but many features can only be sufficiently analyzed at scale through computational textual analysis. Contemporary law regarding intellectual property currently impedes research on copyrighted text, primarily because these works cannot be freely shared between researchers and or libraries/research centers in their human-readable, consumable or expressive form.

To address this problem, in collaboration with CLIR postdoc Alex Wermer-Colan, I have written a natural language processing pipeline using the R programming language. The code, entitled Extracted Features, is available on Temple Libraries’ Loretta C. Duckworth Scholars Studio’s Github page: https://github.com/TempleDSC/Extracted-Features.

The script converts texts from their human-readable form (e.g. the original text of a novel or essay) into a structured, ‘disaggregated’ file format primarily useful for quantitative analyses at scale. For certain computer-assisted TDM models (such as topic modeling), this disaggregated text is just as useful as the original, ordered version of the same text. This code offers an easy-to-use script for enabling the sharing of copyrighted texts as text files that can easily be uploaded to such tools as Voyant-Tools or the Topic Modeling Tool.

Extracting Features from Copyrighted Text Data

Sharing copyrighted works in their original, full-text, formatted, human-readable form is a violation of copyright law. However, the sharing of extracted features of texts protected in their normal form by copyright law is legal.

Many different properties of texts are considered to fall into the category of extracted features. A few examples of extracted features include:

- Information describing the original text’s syntax (parts of speech), semantics (such as named entities), or term frequencies. These extracted features can be used, for example, to identify authorship with stylometry.

- The results of TDM modeling; e.g. the topics discovered in a corpus (a group of texts) through topic modeling.

Of particular interest here is the simplest, namely, word frequencies. Original versions of works (containing all words in their original order) is protected by copyright law. However, that same text (or portions thereof) with word order completely changed is considered an extracted feature of that text, because it is no longer meaningfully consumable (human-readable). Matthew Sag’s recent “The New Legal Landscape for Text Mining and Machine Learning” (2019) provides the most thorough account of the legal precedent for sharing disaggregated, copyrighted data. HathiTrust’s Non-Consumptive Use Research Policy provides the legal precedent for such data curation. In collaboration with such scholars as Ted Underwood, HathiTrust has furthermore created tools for extracting certain features from copyrighted works, as well as for downloading large datasets of extracted features from their digital library.

This project offers an open-source script for those hoping to share copyrighted data. The closest comparable script we have found is available on Github, a Python script written by Jonathan Reeve called Chapterize. Building off this work, this R script goes one step further, disaggregating the data for efficient sharing of copyrighted data. This script has already enabled Temple Libraries’ Digital Scholarship Center to successfully share data from our growing corpus of “New Wave” science fiction novels with a researcher at the University of Kansas. She used these extracted features to perform topic modeling on Temple Libraries’ science fiction corpus, successfully defending her master’s thesis this Spring.

Why is disaggregated Text Data still useful for TDM Modeling?

Some TDM models rely on word order: for example, models that extract information about how words are used together, or how some words are contextualized by others to imply a positive or negative sentiment to a sentence. Disaggregated text data would be useless for these kinds of models, because the word order is one of the most important text feature being examined. However, many other TDM models ignore word order entirely, and instead focus only on comparing relative word frequency. These models identify patterns between the texts in a corpus. Such algorithms only care which words (and how many instances of each word) are in which bag (which text). The bags themselves can get shaken up in any way (the word order can be changed arbitrarily) and the results of a bag-of-words style model will be exactly the same – so long as the bag stays closed and the actual contents remain unchanged (as long as no words get added, removed, or altered during the shaking).

The most advanced form of text analysis that does not require the original text is arguably topic modeling, which treats each document as if it was its own randomly ordered “Bag of Words.” Topic models do exactly what a non-expert might presume; they identify a set of topics (think: themes, subjects, or issue-areas) which best characterize the differences between a group of texts. For example, imagine that you had 10 science fiction novels, but you didn’t know what they were about. You could use a topic model to determine how many broad ‘topics’ exist within this corpus. You might discover that among other topics, your corpus contains a lot of content about three sci-fi typical subjects: outer space, time-travel, and mutants. Further, the topic model would tell you which novels focus primarily on which of those topics, or if any focus evenly on two of those topics, or even all three at once (time-traveling mutants in space!)

Chunking, Sub-sectioning, and Disaggregating Corpora

Such TDM modeling as topic modeling still works for disaggregated texts, because disaggregating (re-ordering) the words leaves the actual contents of the text (the words themselves) — and therefore, their relative frequency — intact. For simplicity, I have chosen to alphabetize the words rather than just randomizing their order.

While both alphabetical and random ordering are equally useless to a human reader, the alphabetical ordering is slightly more helpful if a researcher at-a-glance wanted to see how many times a word (maybe, “spaceship”) appears in a novel. Also, since the number of instances of the word “spaceship” is maintained, a topic model can still discern which novels focus on outer space (those that use spaceship and other space-related words often) from novels that focus on other topics (and therefore rarely or never use outer space-related words).

Putting the words of a text into alphabetical order is itself a relatively easy task for a programming language like R, which contains many powerful tools and packages for working with text. This R script has a few added features which do more than just re-order the words of text(s). These additional features can be useful for research even when altering word order to enable the sharing of copyrighted texts is irrelevant, or not the primary objective. For instance, the code can break texts into sections. This is useful for modeling longer texts that use consistent sections (like chapters). Sectioning can be useful for better model results even in situations where word disaggregating is unimportant or irrelevant. Furthermore, the code can break sections down at a deeper level, chunking a text into sub-sections of approximately proportional length.

The most important reason for sub-sectioning is that the results of TDM modeling methods become less meaningful and less reliable when documents (texts or sections thereof) are of very different lengths. Interesting word patterns are captured less well in relatively longer documents when they are compared in the same model to relatively shorter documents. This means in a corpus where some novels have a few long chapters and others have many short ones, the chapter-level results are less meaningfully comparable than they would be for another corpus of novels where all chapters were of the same length. Therefore, giving a way to standardize text length is very important for methods of TDM where word frequency is of primary importance (again, the “bag of words” methods).

How does this R script operate?

The first parts of the Extracted Features script break each text file (for example, a novel) into several smaller sections, based on a preselected delimiter. The default setting for this code is optimized for use with texts downloadable from Project Gutenberg novels – a database of works of literature that are in the public domain. For these, a common delimiter is “CHAPTER”, in all capital letters.

Breaking novels into sections based on chapter is particularly useful for research on how the contents (such as topics) change from the beginning of a work to the end. To use the above example of a Sci-fi corpus with primary topics that include outer space, time-travel, and mutants, ‘chunking’ a novel into chapter-length sections would allow a topic model to identify that, chapter 1 is primarily about mutants, chapter 2 is primarily about time-travel, and chapter 3 is partially about mutants and partially about time-traveling (time-traveling mutants!).

However, just breaking texts into previously delimited sections still has limitations, and encoding texts can be time consuming. Therefore, we also give the option to break text sections into smaller sub-sections and to automatically chunk the text without a delimiter. Some texts don’t use chapters at all, others only separate their book into two or three extremely long chapters, and some contain a wide array of document lengths. Sub-sectioning helps give the researcher the ability to more meaningfully make comparisons between the works of heterogeneous corpuses.

How to use this R script and what to expect

To get started, download the code from Github, and place your .txt files in the text_data folder. Before running the code, you can alter three key parameters. For more detail on using this script, and adjusting these parameters to manipulate the code’s output, please refer to the README file on the GitHub page.

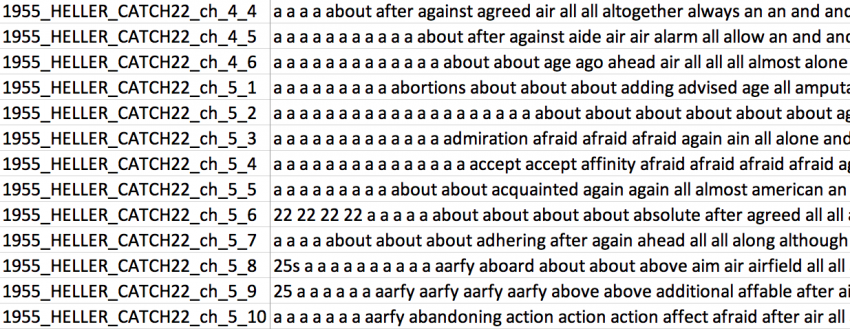

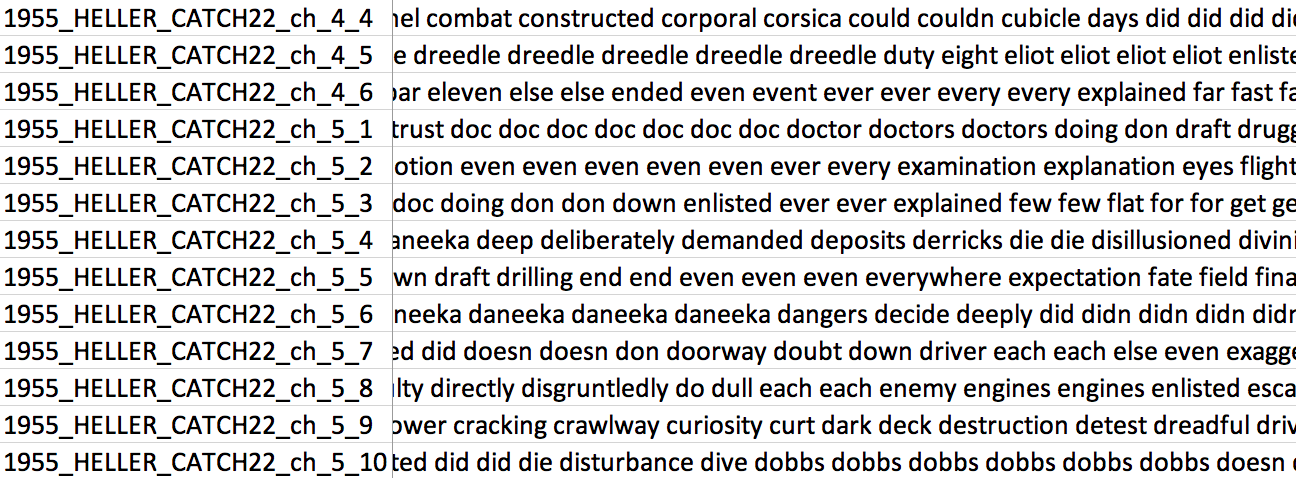

Below are two images of sample output, created from a corpus containing Heller’s copyrighted work Catch 22. Note that sections (chapters) have been sub-divided into subsections, and that words within these subsections are alphabetized.

Future Plans

This script is a work-in-progress, with intentions to develop further features for delimiting and chunking texts, and extracting more complex features. We’d be grateful if you could provide feedback and report issues on Github. Stay tuned in the coming year for further adjustments and options!