As regular readers of this blog know, I like to explore generative AI as a collaborator in my assessment work. Today I experimented with using ChatGPT to categorize 100 open-ended responses from the feedback form for our libraries’ public programs. The exercise included categorizing the raw text, producing a graphic, and a “chain of thought” exchange about how ChatGPT decided which responses belonged where. “Decided” may not be the most accurate word, since it implies a consciousness that AI tools do not have. But still…

The feedback form is emailed to everyone who registers for our programs. Not all attendees register, and feedback responses amount to only about 5% of actual attendance. Of those respondents, 48% are community members, 15% staff, 13% alumni, 15% students and 9% faculty. It’s interesting that friends and community members provide the most feedback on our programs, even though our attendance skews toward undergraduate students.

The identity question has fixed response options and is easily analyzed in Excel. The additional feedback we receive is free text, which is more complicated to analyze. We ask:

“How did you hear about the program?”

This is where I called upon ChatGPT to assist:

Prompt: These are responses to a question about how people found out about a program. What are themes here?

Class

The Charles Library

Library Newsletter

From the artist

e-mail blast

Colleague

[edited]

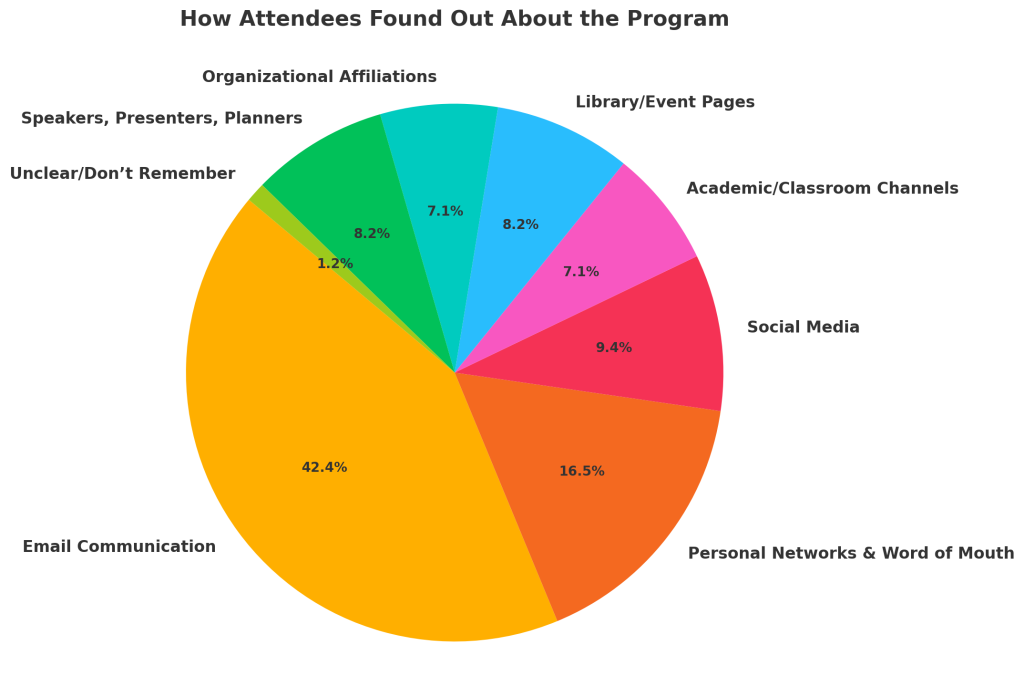

Within seconds ChatGPT categorized the 100 responses into categories with an offer to create a visual:

How do we evaluate this output? On the surface (and with a small amount of data) it looks good. And so fast! ChatGPT also shows us how it populated the categories, which lets us assess the scheme. Digging into the biggest category, Email Communication (42.4%), ChatGPT explains:

1. Email Communication

This is by far the most prominent theme, appearing in many forms.

- General email: Email, Via Email, E-mail, e-mail blast, Email from Temple

- Specific sources: Temple Libraries email, Library email, Temple email, Becca’s email, Email from Charles L. Blockson Afro-American Collection staff

- Organizational lists: Temple List Serv, Library newsletter / AAR newsletter, OVPR, Forecast
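Once each response carries a label, shares like these are easy to recount yourself, and recounting is a cheap check on the output. For instance, 42.4% of exactly 100 responses is not a whole number of responses, so it is worth confirming which total ChatGPT divided by. Below is a minimal Python sketch, assuming each response has been assigned exactly one category; the `category_shares` helper and the labels are hypothetical, for illustration only, not our survey data:

```python
from collections import Counter

def category_shares(labels):
    """Return each category's share of all labeled responses, in percent (one decimal)."""
    counts = Counter(labels)
    total = sum(counts.values())
    # most_common() orders categories from largest to smallest count
    return {category: round(100 * n / total, 1) for category, n in counts.most_common()}

# Hypothetical labels for illustration only (not the actual survey responses).
labels = [
    "Email Communication",
    "Email Communication",
    "Academic/Classroom Channels",
    "Personal Networks",
    "Email Communication",
]
print(category_shares(labels))
```

Comparing a hand count like this against the chart is one way to keep the AI’s percentages honest.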

To get additional clarification, I asked:

Prompt: Explain why e-blast is categorized as Email Communication not Academic Communication.

ChatGPT responds:

Great question! Here’s the reasoning behind categorizing e-blast under Email Communication rather than Academic/Classroom Communication:

Why “e-blast” is considered Email Communication:

- Definition of an e-blast: An “e-blast” typically refers to a mass email sent out to a broad audience, often through mailing list software or institutional communication tools.

- Primary characteristic: The defining feature of an e-blast is its delivery method—email—not the content or sender’s academic affiliation.

- Content-neutral: While it may be sent by an academic department or contain academic information, the categorization is based on how people received the information, not who authored it.

- Consistency: Other responses like “Email from Temple,” “Temple Libraries email,” or “Library Newsletter” also fall under this category, regardless of their academic origin, because they share the same communication channel.

How Academic Communication differs:

“Academic/Classroom Channels” refers more narrowly to:

- Learning environments (e.g., in-class announcements)

- Direct teacher or instructor communication (e.g., “Voice teacher,” “Professor,” “Class”)

- Departmental word-of-mouth or instruction-related messages, where the context is a classroom or academic engagement—not generalized outreach

So, even if a professor sends out an e-blast, it still fits best under Email Communication because it functions more as broad outreach than individual academic instruction or class-based communication.

Would you like a brief footnote or caption explaining this added to your chart or presentation slide?
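ChatGPT’s explanation amounts to a precedence rule: check the delivery channel first, and fall back to academic context only when no email channel is mentioned. A minimal Python sketch of that rule follows; the keyword lists, the `categorize` helper and the “Other” fallback are hypothetical choices for illustration, not a description of what ChatGPT actually does internally:

```python
# Hypothetical keyword rules, checked in order: the first matching rule wins.
# The email (channel) rule comes before the academic (context) rule,
# mirroring the reasoning ChatGPT gave above.
RULES = [
    ("Email Communication", ("email", "e-mail", "e-blast", "newsletter", "list serv", "listserv")),
    ("Academic/Classroom Channels", ("class", "professor", "teacher", "instructor")),
]

def categorize(response, rules=RULES, default="Other"):
    """Return the first category whose keywords appear in the response (case-insensitive)."""
    text = response.lower()
    for category, keywords in rules:
        if any(keyword in text for keyword in keywords):
            return category
    return default

# A mix of responses quoted above and one hypothetical response.
for response in ["e-mail blast", "Email from my professor", "Class", "Colleague"]:
    print(f"{response!r} -> {categorize(response)}")
```

Because the email rule is listed first, the hypothetical “Email from my professor” lands in Email Communication, matching ChatGPT’s point that even a professor’s e-blast counts as email. Plain substring matching is a simplification (for example, “class” also matches “classmate”), so a rule set like this would still need a human review of edge cases.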

I was impressed with this output on several fronts. Had I categorized these responses myself, it would have taken quite some time, and I probably could not have articulated my reasoning about what fit where so clearly. The distinction ChatGPT draws between Email Communication and Academic Communication, based on how the information is received rather than who sent it, seems rather nuanced to me, and it is not a division I would necessarily have made. Understanding it may lead to better branding of our communication and outreach, and perhaps our strategies for reaching our different communities should be evaluated. And here we have evidence of how effective personal networks are at getting the word out.

ChatGPT never has the last word. There is room to refine the prompts and extend the dialog, and asking it to explain its “chain of thought” reasoning is informative. Still, this modest example offers takeaways. The analysis starts a conversation with colleagues about these data, what they might mean, and what actions we might take in response. The exercise is also a lesson in the growing sophistication of AI tools that analyze text, calculate numbers and visualize the results in powerfully convincing ways.