In my capacity as coordinator of library assessment, I’m often consulted about survey design. I even represent the Libraries on the University’s Survey Coordinating Committee. So I should know and use best practices.

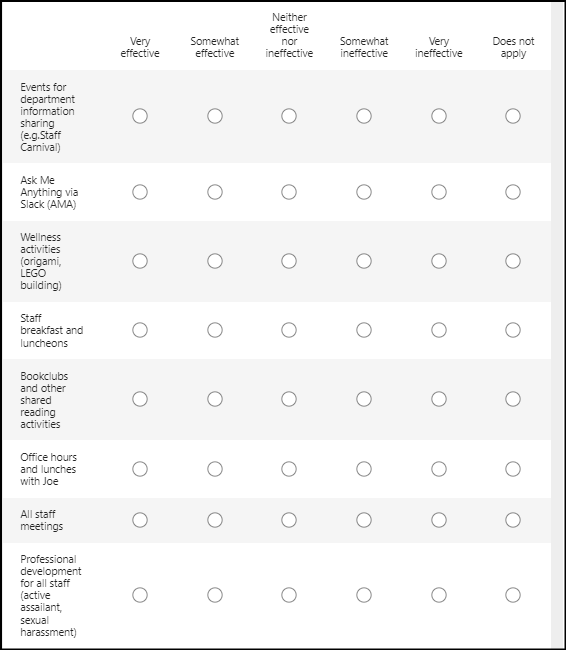

But frequently we want to toss out a quick survey to gauge reactions to a situation – surveys are generally considered a straightforward and suitable method for this. I wanted to gather feedback from library and press staff on the perceived effectiveness of activities put into place to build staff engagement. The survey followed our staff engagement event (retreat), held in December. Six months after the event seemed like a good time to conduct a brief assessment, using examples of staff activities taken directly from the report’s recommended next steps.

It was a short survey. The first question asked,

On a scale of 1-5, how effective is this activity in helping you connect to others in the organization?

A minor misstep.

The question references a scale of 1 to 5, but the options were not numbered! I doubt that this confused too many, but it was, admittedly, a bit sloppy on my part.

But the second question did cause some confusion. Using the same set of activities, the survey asked,

On a scale of 1-5, how effective is this activity for communication and building trust?

This is an example of a double-barreled question, a common survey mistake that asks about two things at once. Communication is not always the same as building trust, so a respondent who finds an activity good for one but not the other has no clear way to answer. When a survey question is not clear and precise, respondents are justly confused, and the data collected is difficult to analyze because the question was not understood in the way it was intended.

Fortunately, the stakes here were not too high. The survey yielded important feedback and suggestions for improvement. For large-scale efforts, though, the University Survey Coordinating Committee and the Office of Institutional Research & Assessment serve an essential purpose. The office protects Temple students and faculty from being over-surveyed. Additionally, its review process puts lots of expert eyes on surveys that go out to our community. Ultimately, our committee work supports better response rates by preventing survey fatigue, and the careful review helps ensure the survey data we do collect is sound.

And of course, it’s a lesson for me and other assessment professionals: there is always room for improvement!