By Ping Feng

The recent news that the Oculus Rift could mimic our facial expressions and transfer them to a virtual avatar has once again stretched our sense of how far human-computer interaction could go. How could machines understand humans better, so as to interact with them more intelligently?

Facebook’s Oculus division has teamed up with researchers at the University of Southern California to modify the Oculus Rift so that it can track a user’s facial expressions and translate them onto a virtual avatar. How did they do this? First, they inserted strain gauges inside the Rift’s foam padding to monitor the movements of the upper part of the person’s face. Then, to capture movement in the lower half of the face, they bolted a RealSense 3D camera to the front of an Oculus Rift DK2. As you can see in the video, a virtual avatar successfully mimics the expressions of the testers.

What does this imply? Is it merely for fun? What implications does this mimicking avatar have for video gaming or social interaction in virtual reality spaces? A likely goal for human-computer interaction is to make virtual communication closely resemble human face-to-face interaction. Most obviously, instead of using pre-built characters and pre-scripted conversation options during gameplay, players could use their mimicking avatars to convey real-time emotions and interact with other players, making in-game communication more vivid and realistic. More importantly, players’ facial expressions could be recorded and transcoded into emotion data for later research. For instance, data on players’ excitement or negative emotions could be collected from their avatars’ facial expressions at different stages of a game, and these variations in emotion could then be analyzed to modify the game and ultimately give players a better gaming experience.

The mimicking avatar treats facial expressions and emotions as one dimension of physiological data that can be recorded and integrated into the Oculus Rift. What about the others? Are there other types of psychophysiological data that could also be integrated into the Oculus Rift?

Controlling or manipulating the outside world with our brains alone, without any muscle work, sounds like something we can only imagine. However, by integrating EEG equipment, players could not only control basic movements in VR games with their brains, without moving a muscle, but also have their brain activity observed and recorded.

Brain-computer interface (BCI) technology potentially opens the door to letting our brains influence the outside world without the use of muscles. It is “a communication systems that can help users interact with the outside environment by translating brain signal into machine commands. The use of electroencephalographic (EEG) signals has become the most common approach for a BCI because of their usability and strong reliability” (Liao, et al., 2012).
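To make "translating brain signal into machine commands" a little more concrete, here is a minimal sketch in Python. It is not how any particular headset or BCI system works. It assumes a single channel of EEG samples at a hypothetical 128 Hz sampling rate, uses the conventional approximate alpha (8 to 12 Hz) and beta (13 to 30 Hz) frequency bands, and applies a made-up rule: when beta power exceeds alpha power, emit a `MOVE_FORWARD` command. The function names, the command names, and the rule itself are all illustrative assumptions.

```python
import cmath
import math


def band_power(samples, sample_rate, low_hz, high_hz):
    """Sum squared DFT magnitudes over bins between low_hz and high_hz."""
    n = len(samples)
    power = 0.0
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        if low_hz <= freq <= high_hz:
            # Naive DFT coefficient for bin k (fine for a short window).
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(samples)
            )
            power += abs(coeff) ** 2
    return power


def to_command(samples, sample_rate=128):
    """Toy rule (an assumption): more beta than alpha power means 'move'."""
    alpha = band_power(samples, sample_rate, 8, 12)
    beta = band_power(samples, sample_rate, 13, 30)
    return "MOVE_FORWARD" if beta > alpha else "IDLE"


def sine(freq_hz, sample_rate=128, seconds=1):
    """Synthetic stand-in for an EEG window: a pure sine wave."""
    n = sample_rate * seconds
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate) for t in range(n)]


if __name__ == "__main__":
    print(to_command(sine(20)))  # 20 Hz falls in the beta band
    print(to_command(sine(10)))  # 10 Hz falls in the alpha band
```

Real EEG is far noisier than a sine wave, so a practical system would add filtering, artifact rejection, and per-user calibration before any such rule could be trusted.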

Chris Zaharia has created an interesting project that integrates Emotiv EPOC EEG equipment with the Oculus Rift 3D headset and the Razer Hydra gaming controller to control the movement of avatars in virtual environments. Although the gameplay still relies somewhat on hand controllers, moving objects in virtual worlds by mind control has already been realized.

https://www.youtube.com/watch?v=yJBB5rxs-8E

Another interesting project is Throw Trucks With Your Mind, which was posted on Kickstarter for crowdfunding. As with the film “Snakes on a Plane”, the title says exactly what you get: in this VR game you throw trucks with your mind. Players wear a NeuroSky headset, which translates the brain’s electrical signals into commands for a multiplayer telekinetic battle. Depending on their concentration level, they can lift a light barrel, or stand on top of a heavy vehicle and levitate it off the ground.
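The idea that concentration level decides what a player can lift can be sketched in a few lines of Python. This is only an illustration, not the game's actual logic: it assumes the headset reports concentration as an integer score from 0 to 100, and the object names and thresholds below are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GameObject:
    name: str
    required_attention: int  # hypothetical threshold on a 0-100 scale


# Invented objects and thresholds, for illustration only.
OBJECTS = [
    GameObject("barrel", 40),
    GameObject("truck", 80),
]


def liftable(objects, attention):
    """Return the names of objects the player can lift at this attention score."""
    return [obj.name for obj in objects if attention >= obj.required_attention]


if __name__ == "__main__":
    for score in (20, 45, 90):
        print(score, liftable(OBJECTS, score))
```

A heavier object simply carries a higher threshold, so a distracted player can move nothing, a moderately focused player can lift the barrel, and only sustained concentration gets the truck off the ground.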

EEG equipment companies themselves have also started to develop basic mind-control video games, built on machine learning systems trained with their wearable headsets. Representative companies include Emotiv, NeuroSky, and Muse.

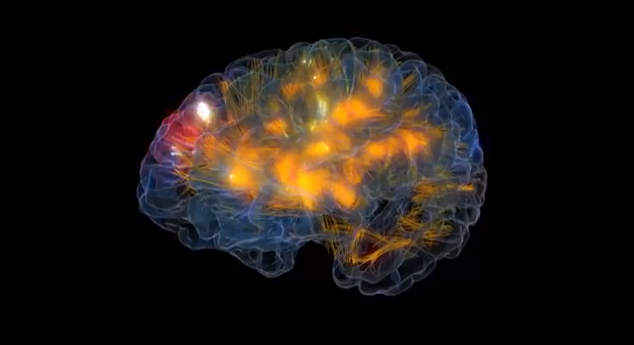

However, beyond mind-control games, there seems to be far more potential in the marriage of the two technologies, Oculus Rift and EEG. Observing human brain activity and transcoding it into meaningful data such as attention, relaxation, and cognition seems far more exciting and has more implications for future human-computer interaction research. A group of scientists at UCSD and UCSF have collaborated with video game developers to create a platform that can show our brain’s reaction to media stimuli in real time. The project, called Glass Brain, was unveiled by Second Life creator Philip Rosedale and a team of researchers at this year’s South by Southwest (SXSW) festival in Austin, Texas. The system uses MRI scans and EEG electrodes to record brain activity, which can be observed by a third party through the Oculus Rift.

How far can the brain-computer interface go? There is certainly more progress to expect in the future. We look forward to the marriage of Oculus Rift and EEG, to the unveiling of the mysteries of brain activity, and to the influence both will have on virtual gaming.
