By Alex Wermer-Colan

For the 2017-2018 Digital Scholarship Center (DSC) annual project, we teamed up with Temple University Libraries’ Special Collections Research Center (SCRC) and Digital Library Initiatives (DLI) to build a digitized corpus of copyrighted science fiction literature. Continuing the DSC’s corpus-building project, this in-house resource offers students and faculty more opportunities for “non-consumptive” research and pedagogy in the intersecting fields of cultural studies, especially genre studies, and computational textual analysis.

Digitizing the Twentieth-Century Canonical Novel

In Spring 2017, the Digital Scholarship Center, under the direction of Prof. Peter Logan and Matt Shoemaker, worked with DLI to digitize copyrighted twentieth-century novels. Peter Logan selected the novels by asking English faculty and graduate students which copyrighted works they used most frequently for teaching and research. The first batch of texts for the DSC’s corpus, then, reflects rather closely what we could call the contemporary canon, containing works by a wide range of authors, from Maxine Hong Kingston and James Baldwin to Vladimir Nabokov and Zadie Smith.

After Temple Libraries purchased editions of approximately one hundred novels, a student worker in Digital Library Initiatives, under the direction of Delphine Khanna, Gabe Galson, and Michael Carroll, proceeded to break the book bindings (using the aptly named guillotine) and send the hundreds of loose sheets through a Fujitsu fi-7460 sheet-feed scanner. These newly digitized texts are currently being checked for errors by DSC graduate student workers, including Emily Cornuet and Crystal Tatis. To facilitate that rather painstaking work, Crystal recently began working directly in ABBYY FineReader to correct the scans, which allows for easier proofreading and the output of corrected text in a range of file formats, from .html to .txt.

In addition to downloading available texts from resources such as Project Gutenberg, the DSC is seeking more affordable ways to digitize copyrighted literature as it grows its corpus, ideally building searchable databases of fields of literature not yet readily available for computational text analysis.

Sifting through the Paskow Science Fiction Collection

Besides its voluminous Urban Archives, the SCRC also houses a significant collection of science-fiction literature. The Paskow Science Fiction Collection was originally established in 1972, when Temple acquired 5,000 science fiction paperbacks from a Temple alumnus, the late David C. Paskow. Subsequent donations, including troves of fanzines and the papers of such sci-fi writers as John Varley and Stanley G. Weinbaum, expanded the collection over the last few decades, both in size and in the range of genres. SCRC staff and undergraduate student workers recently performed the usual comparison of gift titles against cataloged books, removing science fiction items that were exact duplicates of existing holdings. A refocusing of the SCRC’s collection development policy for science fiction de-emphasized fantasy and horror titles, so some titles in those genres were removed as well.

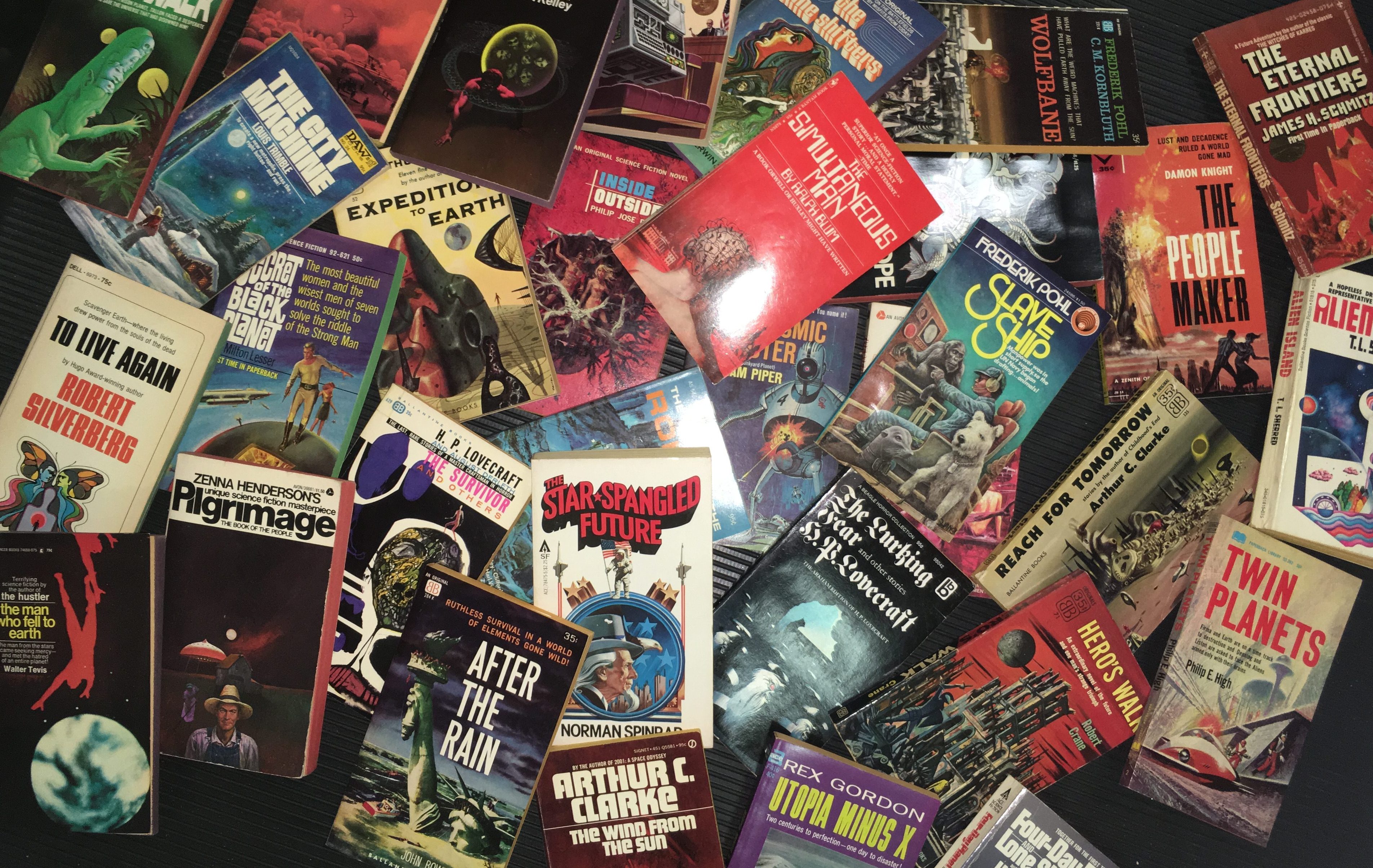

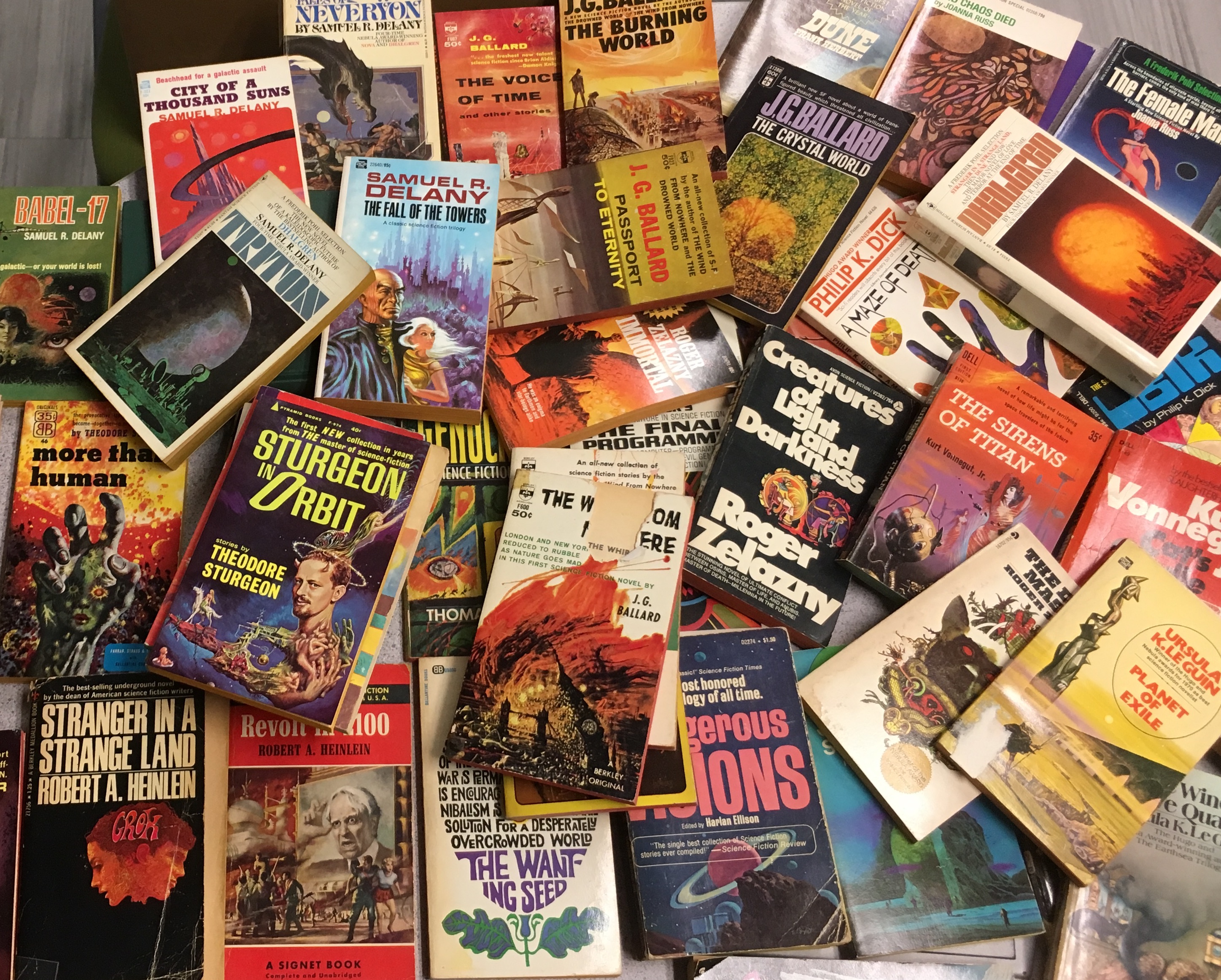

When I started working in the DSC this Fall, SCRC Director Margery Sly kindly gave me a tour of the collection and permission to sort through the hundred-linear-foot set of duplicate books. Thanks to the assistance of Crystal Tatis, Jasmine Clark, Luling Huang, and James Kopziewski, we were able to filter through the materials in a matter of months. That said, a lot of labor can go into corpus building. In this case, without an inventory of the duplicate books, we were forced to sift back and forth through dozens of boxes, making selections before we knew what else we would find. As we sorted out the romance, horror, thriller, young adult, and fantasy titles, it quickly became apparent that the appeal of many mass-market paperbacks from the 1960s and ’70s lay in their cover images and outlandish premises.

Many of these relatively unknown sci-fi novels advertised sensational allegories of alternate American histories and dystopian ecological futures that still resonate in haunting ways with our time. Consider, for instance, such promising titles as The Indians Won (1970), The Day They H-Bombed Los Angeles (1961), The Texas-Israeli War: 1999 (1974), and The Day The Oceans Overflowed (1964). While we did keep many of these gems, we also started to find classic works of that nebulous and contested category of literary style and period, the New Wave of science fiction: stories and novels written approximately from the late 1950s through the mid-1970s by such authors as Samuel R. Delany, Joanna Russ, Philip K. Dick, Roger Zelazny, and Ursula Le Guin. It wasn’t until we’d worked our way through more than three-quarters of the hundred linear feet that we found Afrofuturist works by Octavia Butler.

These were the writers I had hoped to find, the speculative writers of sci-fi who, since the onset of the 1960s, have imagined, rather accurately, many of our era’s most pressing crises. In The Drowned World (1962), for instance, J.G. Ballard portrays a time when solar radiation has melted the polar ice caps, transforming the cities of Europe and America into islands amidst beautiful, decaying lagoons. While Ursula Le Guin’s The Left Hand of Darkness (1969) foreshadows a rather recognizable totalitarian society, Samuel Delany’s Dhalgren (1975) offers a startling, complex vision of a post-apocalyptic American city. Many of the works we selected also fit well with the aesthetics and thematics of a Digital Scholarship Center, including such proto-cyberpunk texts as Jean Mark Gawron’s Algorithm (1978) and the classic anthology Mirrorshades (1986). Finally, we wanted texts that would prove interesting for computational textual analysis, and the New Wave, with its complex experiments in literary form, promises to be a particularly useful and timely resource for research.

Digitizing the New Wave of Sci-Fi Literature

With a workflow already developed, I began working this Fall with DLI’s Gabe Galson and Michael Carroll to initiate this next round of digitization. Michael built an inventory and directed undergraduate workers to begin scanning the materials a couple of months ago. As of this week, the last days of the Fall semester, Michael has informed me that DLI has already scanned over 40 volumes, almost one-third of the sci-fi selection. Compared to the recently purchased and published classics of the twentieth century, these sci-fi works vary greatly in font and formatting, and many of the pages are so dirty that the scanner requires frequent cleaning. Cleaning the resulting texts may therefore prove more onerous as well.

Hopefully by late Spring, on top of the DSC’s twentieth-century novel corpus and the voluminous materials available for download from Project Gutenberg, the DSC will be able to offer a digitized corpus of New Wave science fiction for those looking to analyze on a large scale some of the literature most reflective of our seemingly dystopian future. In the meantime, keep an eye out in the new year for more posts to the DSC blog on the sci-fi corpus, where I’ll explore the existing academic scholarship involving distant reading (large-scale textual analysis) of sci-fi lit and give samples of various research projects that could be conducted with our corpus.

Thanks again to everyone in DLI, the SCRC, and the DSC for their contributions to this year’s DSC project. Over the coming years, the digitized corpus will hopefully continue to grow, as this collaborative venture continues to digitize books not otherwise available for computational textual analysis. For a sneak peek, here is one possible selection of mass-market sci-fi books we could send off to the scanners in the future: