By Beth Seltzer

For my summer project in Temple’s Digital Scholarship Center, I wanted to use textual analysis to learn more about Victorian detective fiction. Scholarship has only touched on a small percentage of Victorian detective authors–Sir Arthur Conan Doyle of Sherlock Holmes fame, Wilkie Collins’s sensation novels, and a handful of others. Yet there were hundreds of short stories and novels from the time period which are increasingly digitized and freely available online. Since my dissertation research is on the genre of detective fiction, I’m hoping that computer-assisted textual analysis will help me get a fuller sense of the genre.

Sometimes you’ll find a corpus ready-made for you. In my case, it’s going to be tricky just to identify novels and stories from 1830-1900 as detective fiction, much less track them down. I’m dealing with a budding genre and very obscure texts. So I’m going to be relying a lot on these highly-digital tools:

Since I’m not going to read all the Victorian novels that exist (around 400,000 by one estimate), I need to rely as much as I can on work done by human eyes to figure out which books are actually “detective fiction.”

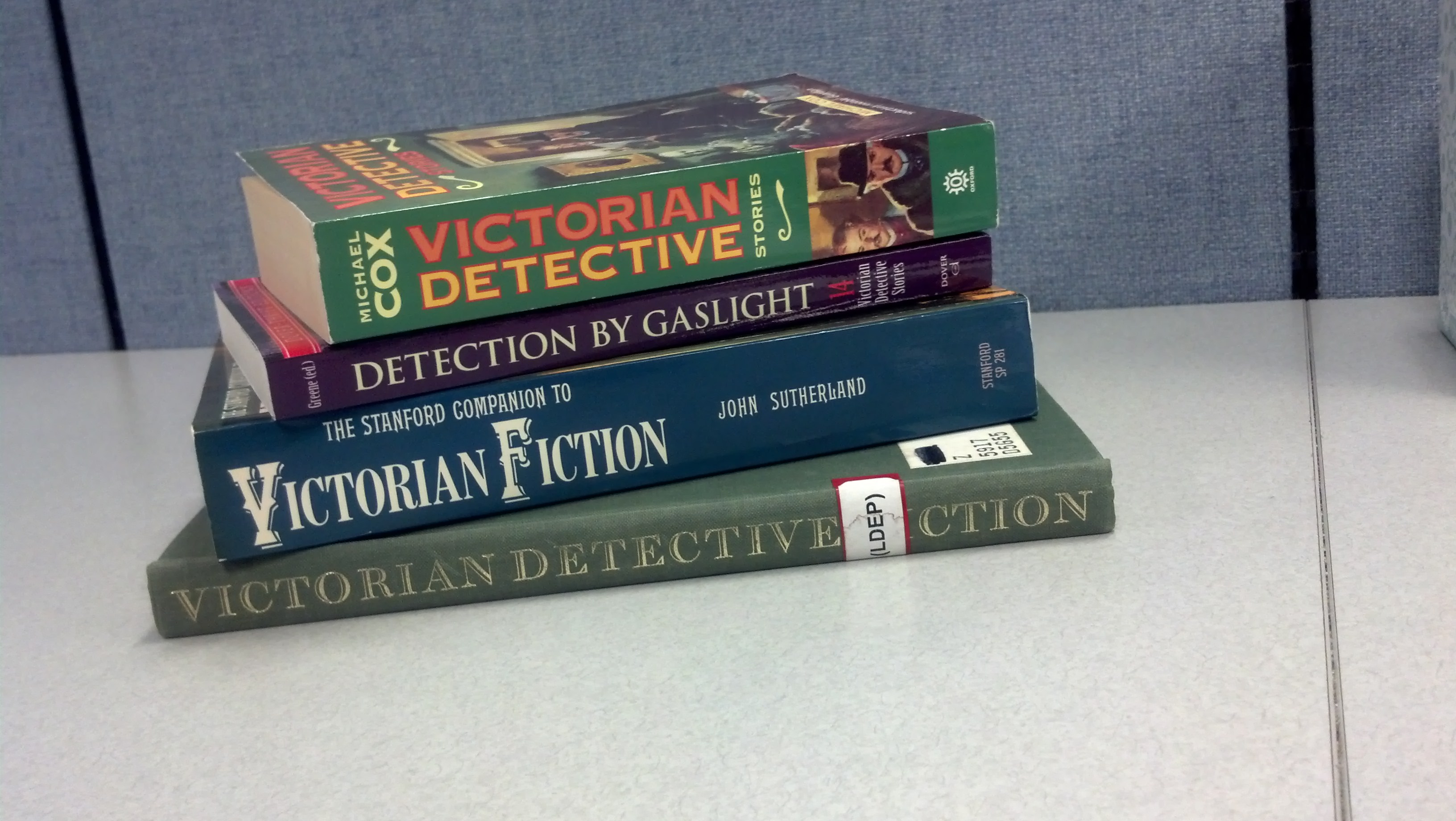

The most useful book for me is Graham Greene and Dorothy Glover’s 1966 catalogue, Victorian Detective Fiction, which I got from Temple’s library depository. This catalogue lists 471 novels, all vetted as fitting into the loose, Victorian definition of the genre—a novel mostly about detection, containing a detective. It’s important to keep this contemporary definition in mind, since we wouldn’t necessarily call many of these detective novels today. (If you’re used to Agatha Christie’s gang of suspects, it may be a surprise to start reading one of these earlier novels and find only one obvious criminal, for instance.)

My first big task is to look through this catalogue, and some other books I’ve got on hand, and try to track down as many of these texts as I can.

A lot of digital textual analysis projects require clean, plain-text versions of the book, so my first stop is Project Gutenberg.

Gutenberg texts aren’t perfect, but they were all corrected by hand, so they’re among the cleanest copies out there for digital projects. Other good sources for “clean” digital books include Eighteenth-Century Collections Online, Women Writer’s Project, and JSTOR Data for Research.

However, Gutenberg has a comparatively small collection of 45,000 books, and they only have around 13% of the books I look for. So if I can’t find a book, I search for it in the Internet Archive, an open library of about 6 million texts.

Internet Archive easily lets you convert a book into plain-text. Unfortunately, they give you rough, unedited OCR, so it’s going to need more work to get it clean enough to use. Look at this fun sort of stuff you find at the beginning of most of these books:

m

:M.^^&M^i^f,

wmmm

^-»>4#^^’.SL^gJH’

I find about 18% of what I searched for in the Internet Archive.

If I still can’t find a book, then I move on to sites that only have PDFs, like the HathiTrust and Google Books. These sites have the most texts to work with, but they’ll be more time-consuming to process, since in most cases I’ll need to run them through OCR software like Adobe Acrobat Pro or ABBYY FineReader to get them into the text format I need. I find about 9% of what I search for here that I can’t get in any other form.

But wait, you’re saying, you’ve only found about 40% of what you looked for! And the rest, all of those texts which are still marked with yellow sticky-tabs?

Some of these books have never been digitized, some might be available on other databases I haven’t tried yet, and some I just don’t have access to. For now, I’ll keep searching.