The R shiny package is impressive, it gives you the power of R, plus any number of packages, and in combination with your data allows you to create a personalized web application without having to know any JavaScript. There are endless possibilities of display options, add-on widgets, and visualization possibilities. While working on another project I ran into a really simple problem that took way too long solve. I watched innumerable tutorials and read up on the documentation, but for some reason I could not get an input selector to display reactive data based on a previously selected input. The ability to narrow down an input is something that is encountered on websites daily when entering address fields- Enter Country; which drives the next pull-down menu to offer up a list of States, but it took a while to make it for me to get to work in a Shiny app.

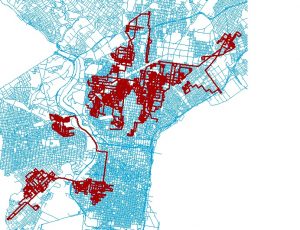

In order to make someone’s life a bit easier here is an example that I cobbled together that offers up county names based on the State selected, here for brevity’s sake the example uses a table created in the R code that only includes Delaware and Rhode Island- no extra data is needed to be downloaded. As a bonus I added a plot output using the “maps” package to highlight the selected county. There is code to install the “map” package, the assumption is being made that if you’re this far the “shiny” package is already installed and you are doing all of this through RStudio.

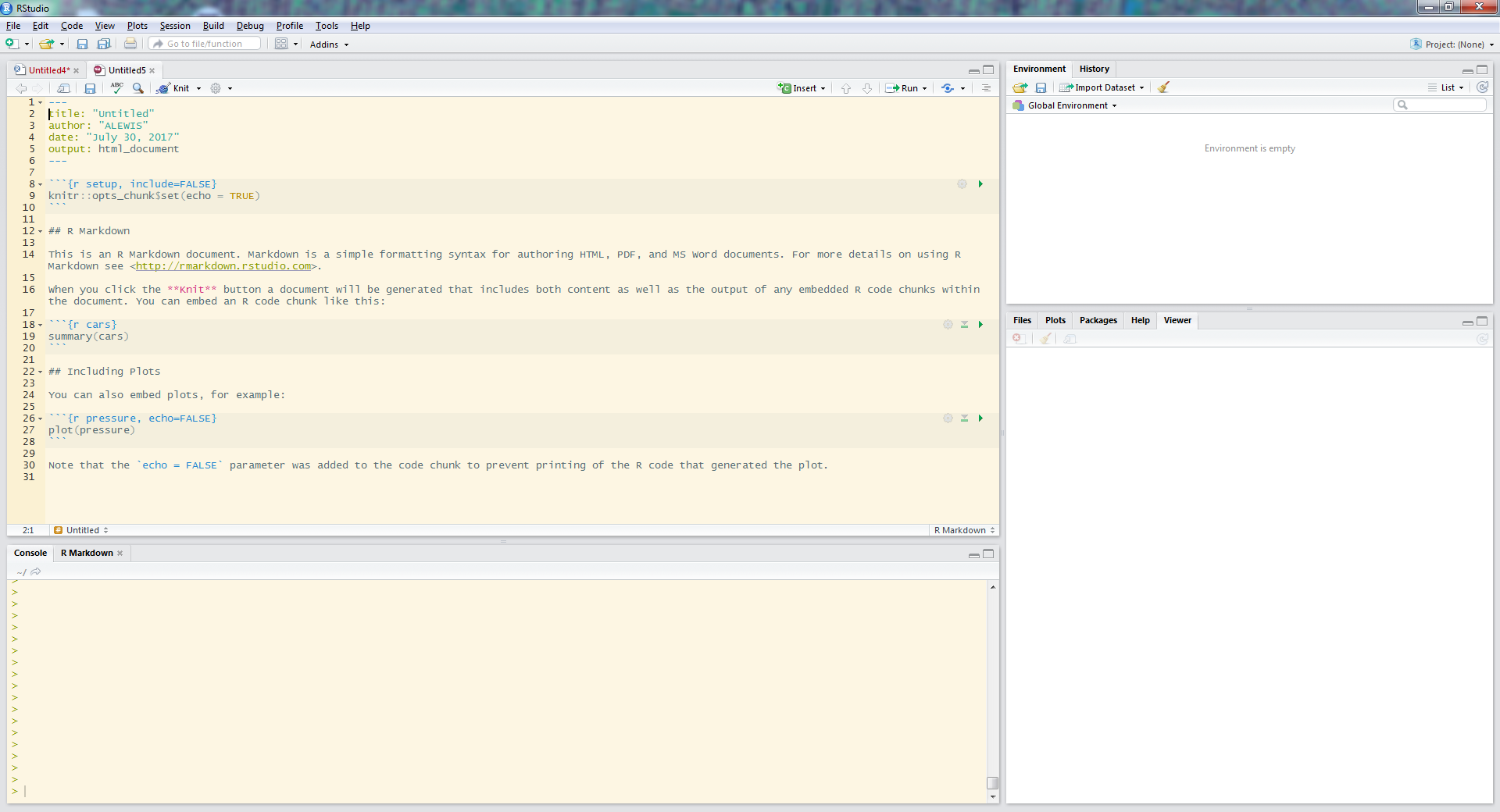

In RStudio paste the following code into a new file and name that file app.R so that RStudio recognizes it as a shiny app, as a best practice save it in a new folder that does not have any other files in it. Once saved as app.R the “Run” button at the top of the console should now say “Run App”. Click the “Run App” button and the app should load in a new window.

Breakdown of the file:

Section 1: runs once before the app is created and establishes the data in the app- could use this to upload a file, but in this example the datatable is created here.

Section 2: User interface(UI): this section sets up the appearance of the app, in this example the most important part was calling the input selectboxes using the “htmlOutput()” call that grabs information from the next section.

Section 3: Server: The “output$state_selector” uses the “renderUI()” call to utilize the “selectInput()” parameters set up the appearance and data in the state select input box of the UI. The second similar call “output$county_selector” uses the data from the state_selector call to filter the datatable and then this filtered data (now named “data_available”) is called by the second selectInput() command. Notice that each of the selectInput calls are wrapped in their own renderUI call. The last bit“output$plot1”, uses the info from the previous calls to display a map highlighting the selected county using the “renderPlot() call.

Section 4: make sure this is the last line of code in your file.

The following code is extensively commented, and should allow you to reuse as needed.

#install.packages( "maps", dependencies = TRUE) #run this to install R package maps

################################- warning this will update existing packages if already installed

#*save the following code in a file named app.R *

library(shiny)

library(maps)

##Section 1 ____________________________________________________

#load your data or create a data table as follows:

countyData = read.table(

text = "State County

Delaware Kent

Delaware 'New Castle'

Delaware Sussex

'Rhode Island' Bristol

'Rhode Island' Kent

'Rhode Island' Newport

'Rhode Island' Providence

'Rhode Island' Washington",

header = TRUE, stringsAsFactors = FALSE)

##Section 2 ____________________________________________________

#set up the user interface

ui = shinyUI(

fluidPage( #allows layout to fill browser window

titlePanel("Reactive select input boxes"),

#adds a title to page and browser tab

#-use "title = 'tab name'" to name browser tab

sidebarPanel( #designates location of following items

htmlOutput("state_selector"),#add selectinput boxs

htmlOutput("county_selector")# from objects created in server

),

mainPanel(

plotOutput("plot1") #put plot item in main area

)

) )

##Section 3 ____________________________________________________

#server controls what is displayed by the user interface

server = shinyServer(function(input, output) {

#creates logic behind ui outputs ** pay attention to letter case in names

output$state_selector = renderUI({ #creates State select box object called in ui

selectInput(inputId = "state", #name of input

label = "State:", #label displayed in ui

choices = as.character(unique(countyData$State)),

# calls unique values from the State column in the previously created table

selected = "Delaware") #default choice (not required)

})

output$county_selector = renderUI({#creates County select box object called in ui

data_available = countyData[countyData$State == input$state, "County"]

#creates a reactive list of available counties based on the State selection made

selectInput(inputId = "county", #name of input

label = "County:", #label displayed in ui

choices = unique(data_available), #calls list of available counties

selected = unique(data_available)[1])

})

output$plot1 = renderPlot({ #creates a the plot to go in the mainPanel

map('county', region = input$state)

#uses the map function based on the state selected

map('county', region =paste(input$state,input$county, sep=','),

add = T, fill = T, col = 'red')

#adds plot of the selected county filled in red

})

})#close the shinyServer

##Section 4____________________________________________________

shinyApp(ui = ui, server = server) #need this if combining ui and server into one file.

See the wonder live at shinyapps.io