Research

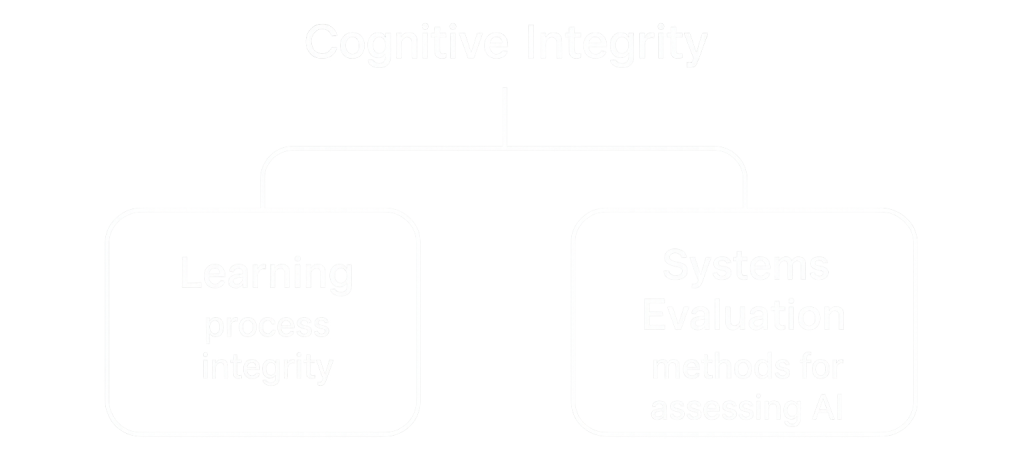

Our work develops along two connected strands.

- Learning

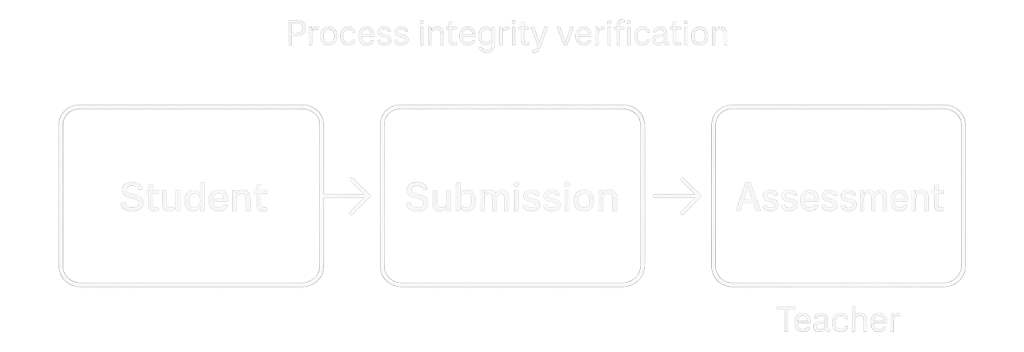

Essays carry the signatures of a student’s thought process: how a thesis develops, how ideas connect, how revisions show reflection. When students rely on generative AI, those signatures can be simulated on the page yet remain disconnected from the student’s own reasoning.

The Lab is building a way to verify that these signatures remain authentic and connected to the student’s cognitive work. Instead of grading the submission, the system asks whether students can re-enter and navigate their own reasoning. Through small, conversational prompts, students paraphrase claims, add examples, revise transitions, and reflect on their choices. The aim isn’t to catch cheaters, monitor students, or replace teachers. It is to confirm that the essay still reflects the student’s thinking in action: that the cues in the writing still “light up” when the student is guided to engage with them.

This approach helps protect the essay as a space for learning, while also laying the groundwork for new tools and protocols that support human agency more broadly.

- Systems Evaluation

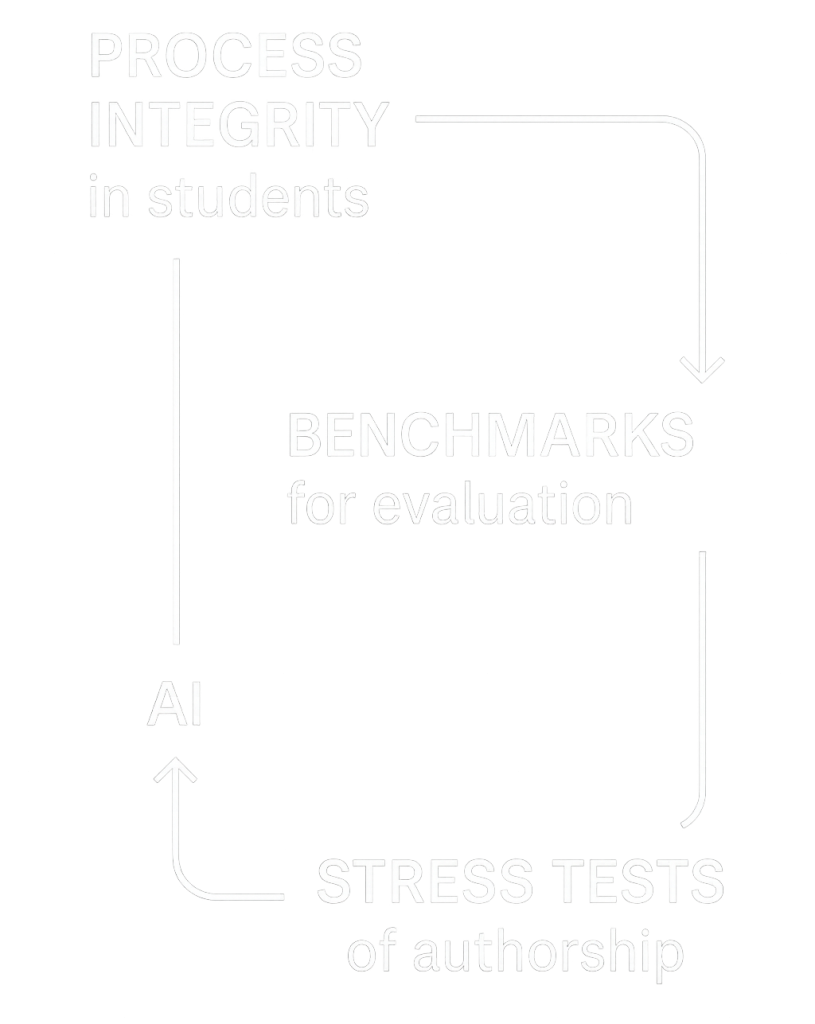

The same principles that help verify process integrity in a classroom essay also open a path for evaluating AI systems themselves. Instead of assessing an AI system only by the accuracy of its individual outputs, we ask how it affects human thinking over time: does it support authorship, reflection, and judgment, or does it erode them?

This creates a virtuous loop. Insights from student verification become benchmarks for testing AI systems, and those tests refine how we protect student work. The result is a foundation for studying cognitive mediation: how people engage with their own work and interact with others, with AI in between.

⸻

Why This Matters

When authorship blurs and reflection weakens, freedom of thought erodes, whether in student essays, professional work, or civic discourse. AI is neither just a tool nor a true collaborator but a cognitive environment that is already recalibrating how we think and interact. Our research develops ways to maintain human agency within that environment. By establishing principles for cognitive integrity, we’re creating protocols that empower individuals and institutions to integrate AI on their own terms.

© 2025 Cognitive Integrity Lab · Temple University